The Bottom Line: The Necessary Scaffolding for Autonomous AI

If you’ve heard the term “AI agent” recently, you’re not alone. It’s the biggest buzzword in tech, promising autonomous systems that can book flights, write complex code, or conduct deep research with a single prompt. But the reality is nuanced. The tools enabling this revolution aren’t just the AI models themselves (like Gemini 3 or GPT-5), but the AI agent frameworks that provide the scaffolding.

These frameworks—like LangChain, LlamaIndex, AutoGen, and CrewAI—are essential blueprints and toolkits for developers. They provide the structure for an AI to plan tasks, use tools (like web search or code interpreters), and remember past actions. While powerful, they are still maturing. Building reliable agents remains difficult, and debugging them can be incredibly complex. This guide is for developers and tech leaders who need to understand the landscape, compare the major players, and separate the hype from the current reality of autonomous AI in late 2025.

- 💥 Hype vs. Reality: What Can Agents Actually Do?

- 🧠 What AI Agent Frameworks Actually Do (The Car Metaphor)

- 🔧 The Anatomy of an AI Agent

- 🥊 The Big Four: Comparing Leading Frameworks

- 🚀 Getting Started: Building a Research Crew in 15 Minutes

- ✅ Use Cases That Work (And Those That Don’t)

- 👩‍💻 The Developer Experience: Pain Points and Progress

- 🔮 The Road Ahead: What’s Next in 2026?

- ⚖️ Final Verdict: Which Framework Should You Choose?

- ❓ FAQs: Your Questions Answered

💥 Hype vs. Reality: What Can Agents Actually Do?

In 2025, the promise of AI agents is everywhere. We see demos of AI coding assistants building entire applications from scratch. We hear about “autonomous research analysts” replacing hours of manual work. The implication is clear: AI is moving beyond the conversational chatbot interface and into active, goal-oriented tasks.

This shift is profound. A chatbot responds; an agent acts.

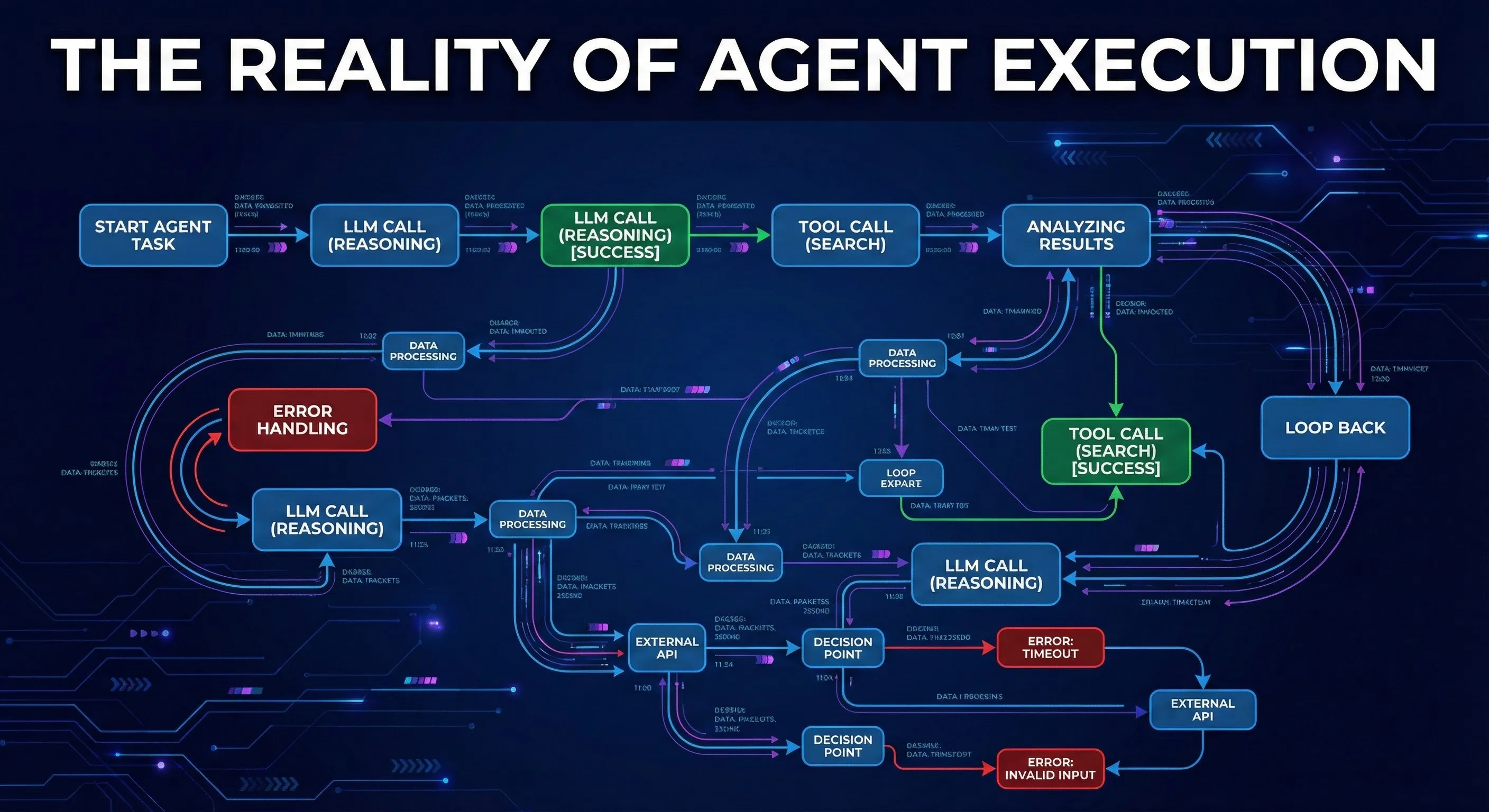

However, the hype train is running significantly ahead of the tracks. While the potential is massive, the current state of AI agent frameworks requires a heavy dose of realism. Many developers who jump in expecting to build a fully autonomous employee in a weekend find themselves bogged down in configuration hell, battling agents that get stuck in loops, or struggling to make the agent’s actions reliable enough for production use.

🔍 REALITY CHECK: The “Autonomous” Dream

Marketing Claims: “Build fully autonomous agents that can operate independently to achieve complex goals.”

Actual Experience: Borrowing the autonomy levels used for self-driving cars, most agents today operate at “Level 2” or “Level 3” (partial or conditional autonomy). They require human oversight, often get stuck when encountering unexpected scenarios, and need their goals explicitly defined. True “Level 5” (fully autonomous) agents that can self-direct and handle ambiguity reliably are still largely theoretical in late 2025.

Verdict: Think of AI agents as incredibly capable interns, not seasoned executives. They need direction, guardrails, and review.

The truth is, building a useful AI agent is hard. It requires understanding not just the AI model, but how to structure its thought process, how to integrate external tools securely, and how to handle inevitable errors. That’s where the AI agent framework comes in.

🧠 What AI Agent Frameworks Actually Do (The Car Metaphor)

If a Large Language Model (LLM) like Claude or GPT is the “brain”—the reasoning engine—then the AI agent framework is the rest of the car: the steering wheel, the GPS, the transmission, and the brakes.

An LLM on its own is surprisingly limited. It can process information and generate text, but it can’t reliably:

- Browse the current internet for up-to-date information.

- Perform complex mathematics or symbolic reasoning.

- Remember information beyond its immediate context window.

- Execute code or interact with external APIs (like your CRM or email).

An AI agent framework solves these problems. It provides the necessary plumbing to turn a static LLM into a dynamic agent. The framework doesn’t do the thinking (that’s the LLM’s job), but it provides the structure, coordinates the tools, and manages the execution flow.

In short: You cannot build a useful AI agent without some sort of framework, whether you use a popular library or build the components yourself.
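
To make that division of labor concrete, here is a minimal sketch of the loop a framework runs on your behalf. Everything in it is a stand-in: `fake_llm` is a hypothetical scripted function used in place of a real model call, and `run_agent`, `TOOLS`, and the `calculator` tool are illustrative names, not part of any framework's API.

```python
from typing import Callable

def calculator(expression: str) -> str:
    """Toy tool: add two integers written as 'a+b'."""
    left, right = expression.split("+")
    return str(int(left) + int(right))

# Registry of tools the agent is allowed to call (hypothetical).
TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}

def fake_llm(history: list[str]) -> dict:
    """Hypothetical stand-in for a model call: request a tool once, then answer."""
    observations = [h for h in history if h.startswith("observation: ")]
    if not observations:
        return {"action": "calculator", "input": "2+40"}
    return {"action": "final", "input": observations[-1].split(": ", 1)[1]}

def run_agent(goal: str, max_steps: int = 5) -> str:
    history = [f"goal: {goal}"]
    for _ in range(max_steps):
        decision = fake_llm(history)            # the model decides what to do
        if decision["action"] == "final":
            return decision["input"]
        tool = TOOLS[decision["action"]]        # the framework dispatches the call
        history.append(f"observation: {tool(decision['input'])}")
    # The step cap keeps a confused agent from looping forever.
    raise RuntimeError("agent exceeded max_steps without finishing")

answer = run_agent("What is 2 + 40?")
print(answer)
```

The model only ever returns a decision; the loop, the tool call, and the step cap all live outside it. That outer layer is what you are buying (or building) when you adopt a framework.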

🔧 The Anatomy of an AI Agent

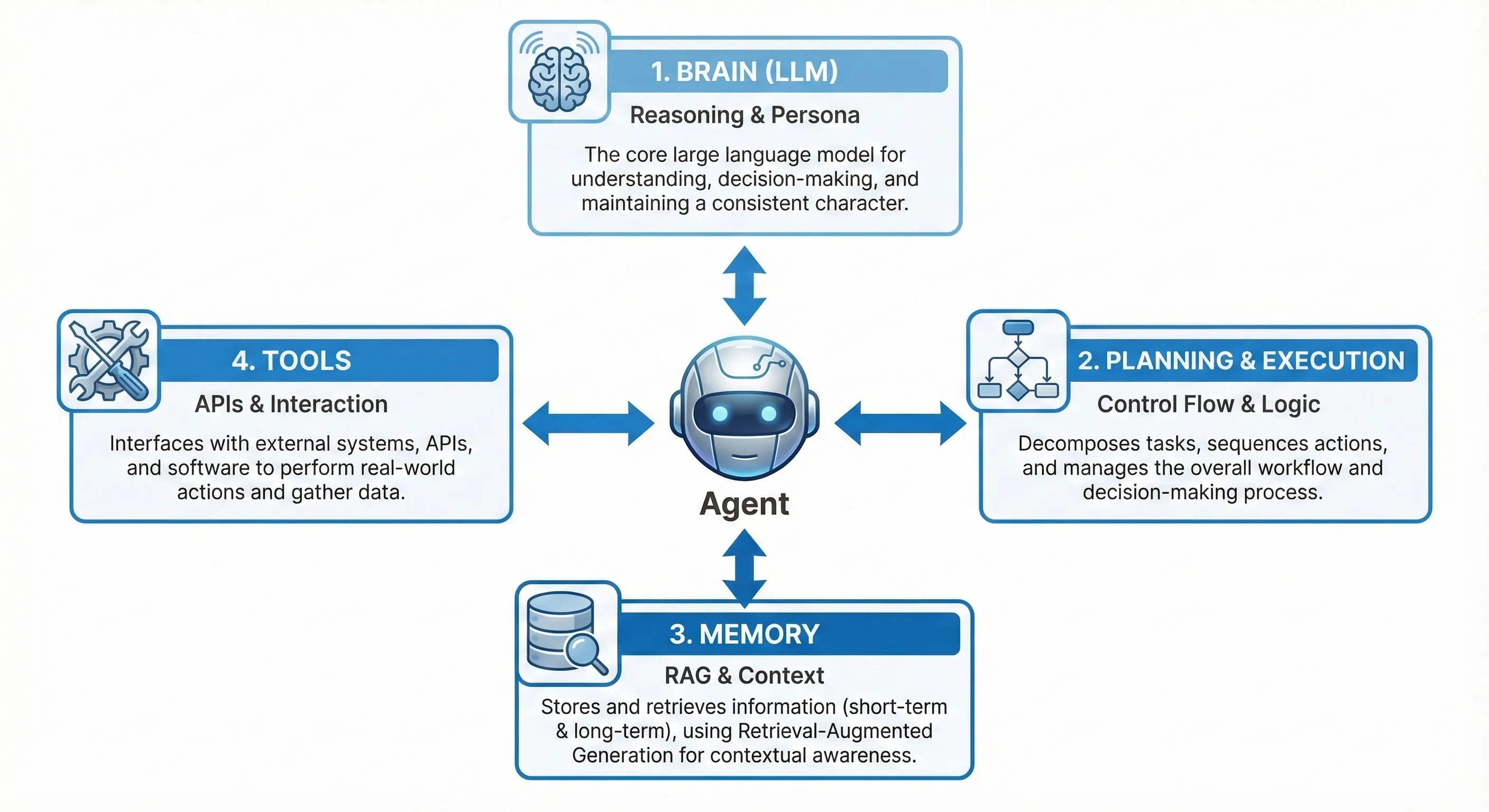

While different frameworks use slightly different terminology, almost all AI agents built today share four core components provided by the framework:

1. The Brain (The LLM/Profile)

This is the core reasoning engine, usually a powerful model like Claude Opus or Gemini 3. The framework helps you define the agent’s persona, its goals, and its constraints. This is essentially sophisticated prompt engineering managed by the framework.

2. Planning and Execution

This is the most critical part. How does the agent break down a complex goal (“Analyze the competitive landscape for electric vehicles”) into smaller steps (“Search for top 10 EV manufacturers,” “Analyze their Q4 reports,” “Identify key trends”)?

Early frameworks often relied on ReAct (Reasoning + Acting), in which the model alternates between a reasoning step and a tool call and decides one step at a time; this approach sometimes struggled with long-term planning. Modern frameworks in 2025 increasingly use graph-based orchestration (as in LangGraph), which lets developers explicitly define the flow of operations and decision points and offers much greater control and reliability.
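
The idea of explicit, graph-based control flow can be sketched in plain Python. This is not LangGraph's API; `GRAPH`, `run_graph`, and the three node functions are hypothetical names chosen for illustration, and the "search" and "analyze" bodies are placeholders for real model and tool calls.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], Optional[str]]  # returns the next node name, or None to stop

def search(state: State) -> str:
    state["sources"] = ["report A", "report B"]  # placeholder for a real search
    return "analyze"

def analyze(state: State) -> str:
    state["attempts"] = state.get("attempts", 0) + 1
    state["draft"] = f"summary of {len(state['sources'])} sources"
    return "review"

def review(state: State) -> Optional[str]:
    # Explicit decision point: send the draft back once, then finish.
    if state["attempts"] < 2:
        return "analyze"
    return None

GRAPH: dict[str, Node] = {"search": search, "analyze": analyze, "review": review}

def run_graph(start: str, state: State, max_steps: int = 10) -> list[str]:
    path: list[str] = []
    current: Optional[str] = start
    while current is not None:
        if len(path) >= max_steps:
            raise RuntimeError("step budget exhausted")
        path.append(current)
        current = GRAPH[current](state)
    return path

state: State = {}
path = run_graph("search", state)
print(path)
```

Because every transition is written down, the loop between "analyze" and "review" is bounded and visible in the code, rather than being something the model may or may not decide to do.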

3. Memory

LLMs have a limited context window: the amount of information they can “hold in their head” at one time. An agent framework provides both short-term memory (managing the current conversation and task progress) and long-term memory (retrieving relevant information from a knowledge base, often using vector databases and retrieval-augmented generation, or RAG).
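
The two kinds of memory can be illustrated with a toy sketch. It assumes a simple word-overlap similarity instead of real embeddings, and an in-process list instead of a vector database; `AgentMemory` and its methods are hypothetical names, not a framework class.

```python
import math
from collections import Counter, deque

def vectorize(text: str) -> Counter:
    """Bag-of-words vector; a real system would use an embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        # Short-term: only the most recent turns fit, like a context window.
        self.short_term: deque = deque(maxlen=short_term_size)
        # Long-term: everything is kept and retrieved by similarity.
        self.long_term: list[str] = []

    def remember_turn(self, message: str) -> None:
        self.short_term.append(message)

    def store_fact(self, fact: str) -> None:
        self.long_term.append(fact)

    def recall(self, query: str, k: int = 1) -> list[str]:
        q = vectorize(query)
        ranked = sorted(self.long_term, key=lambda f: cosine(q, vectorize(f)), reverse=True)
        return ranked[:k]

memory = AgentMemory(short_term_size=2)
for turn in ["hello", "find EV makers", "summarize results"]:
    memory.remember_turn(turn)
memory.store_fact("tesla reported record deliveries in q4")
memory.store_fact("the office coffee machine is broken")
top = memory.recall("q4 deliveries for tesla")
print(list(memory.short_term), top)
```

The oldest turn falls out of short-term memory once the window is full, while the long-term store returns only the fact that is relevant to the query.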

4. Tools

This is how the agent interacts with the outside world. The framework provides integrations for hundreds of tools:

- Web Search: Google Search, Brave, Tavily.

- Code Execution: Python interpreters, sandboxed environments.

- Data Access: SQL databases, APIs (e.g., Salesforce, Slack).

- Actions: Sending emails, booking calendar appointments.

The framework manages the complex process of deciding which tool to use, formatting the input for the tool correctly, and interpreting the output.
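
A rough sketch of that tool-handling step follows. It assumes the model emits a JSON object with `tool` and `args` keys, which is a convention invented for this example; `REGISTRY`, `dispatch`, and both toy tools are hypothetical.

```python
import json
from typing import Callable

def word_count(text: str) -> int:
    return len(text.split())

def to_upper(text: str) -> str:
    return text.upper()

# Only tools listed here can be called, whatever the model asks for.
REGISTRY: dict[str, Callable] = {"word_count": word_count, "to_upper": to_upper}

def dispatch(model_output: str) -> str:
    """Parse a JSON tool call, run it, and return an observation string."""
    try:
        call = json.loads(model_output)
        name, args = call["tool"], call["args"]
    except (json.JSONDecodeError, KeyError, TypeError):
        return "error: expected JSON with 'tool' and 'args' keys"
    if name not in REGISTRY:
        return f"error: unknown tool '{name}'"
    try:
        return f"result: {REGISTRY[name](**args)}"
    except TypeError as exc:
        # Wrong or missing arguments are reported back instead of crashing.
        return f"error: bad arguments ({exc})"

print(dispatch('{"tool": "word_count", "args": {"text": "a b c"}}'))
print(dispatch('{"tool": "fly", "args": {}}'))
print(dispatch("not json"))
```

Returning errors as text, rather than raising, gives the model a chance to correct its own malformed call on the next turn.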

🥊 The Big Four: Comparing Leading Frameworks

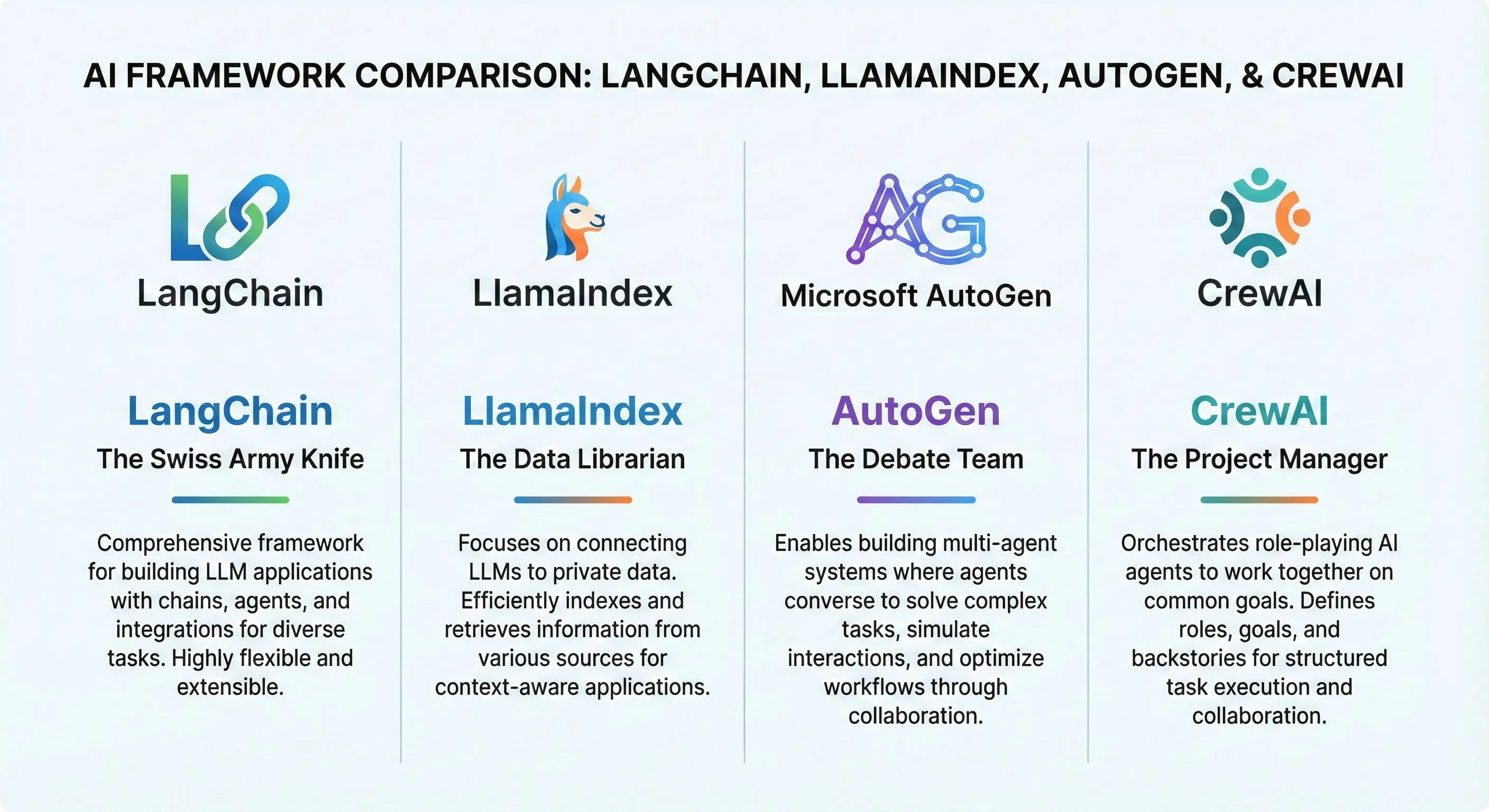

The AI agent framework landscape has consolidated significantly over the past year. While many smaller projects exist, four frameworks dominate the discussion and adoption among developers in 2025.

1. LangChain: The Swiss Army Knife

LangChain was the first major framework to gain traction and remains the most widely known. Its philosophy is breadth and integration.

- Strengths: Massive ecosystem with integrations for nearly every LLM, vector database, and tool imaginable. Excellent for rapid prototyping. The introduction of LangGraph (for control flow) and LangSmith (for debugging/observability) has addressed key historical pain points.

- Weaknesses: Can be overly complex and abstract. Developers sometimes complain it hides too much of the underlying process, making customization difficult. The sheer number of options can be overwhelming.

- Best For: Developers who need a wide range of integrations and want maximum flexibility, provided they invest in learning the ecosystem.

2. LlamaIndex: The Data Librarian

LlamaIndex started life focused heavily on Retrieval-Augmented Generation (RAG) and data ingestion. While it has evolved into a full agent framework, its roots in data remain its core strength.

- Strengths: Best-in-class data connectors, indexing, and retrieval capabilities. Highly sophisticated RAG pipelines (e.g., sentence window retrieval, auto-merging retrieval) that outperform basic vector search.

- Weaknesses: Not as versatile as LangChain for general-purpose agent tasks or complex tool orchestration. Smaller ecosystem of non-data-related tools.

- Best For: Building agents that need to interact with complex, large-scale private data (e.g., financial analysis agents, legal document review, advanced knowledge bots).

3. Microsoft AutoGen: The Debate Team

Developed by Microsoft Research, AutoGen takes a different approach by focusing on multi-agent conversations. Instead of a single agent planning and executing, AutoGen orchestrates multiple specialized agents that work together by “talking” to each other.

- Strengths: Excellent for simulating complex workflows and decision-making processes. Highly flexible agent definition and strong support for human-in-the-loop interaction. Excels at code generation tasks where agents can iterate and review each other’s work.

- Weaknesses: Steeper learning curve. Multi-agent conversations can become complex to debug and expensive (high token usage) if agents get stuck in loops.

- Best For: Complex tasks requiring collaboration, such as software development workflows, iterative brainstorming, and scenarios needing human oversight.

4. CrewAI: The Project Manager

CrewAI is a newer entrant that focuses on role-based, multi-agent orchestration, similar to AutoGen but arguably more accessible and structured. It emphasizes defining clear roles, goals, and processes for a “crew” of AI agents.

- Strengths: Highly intuitive structure based on real-world team dynamics (Roles, Tasks, Processes). Good balance between abstraction and control. Rapidly gaining popularity for its ease of use in multi-agent systems. It leverages LangChain tools, providing access to a large ecosystem.

- Weaknesses: Less mature than LangChain. Being more opinionated, it might be less flexible for highly customized conversational patterns compared to AutoGen.

- Best For: Developers looking for an accessible entry point into multi-agent systems, workflow automation (like content creation or research), and simulating team dynamics.

Comparison Overview

| Feature | LangChain | LlamaIndex | AutoGen | CrewAI |
|---|---|---|---|---|
| Core Philosophy | Versatility & Integration | Data Ingestion & RAG | Multi-Agent Conversation | Role-Based Orchestration |
| Ecosystem Size | Vast | Medium (Data-focused) | Medium | Growing (Leverages LangChain) |
| Learning Curve | High | Medium | High | Low/Medium |
| Strengths | Flexibility, Tool Use, Observability (LangSmith) | Advanced RAG Pipelines | Complex Workflows, Human-in-loop, Code execution | Accessible Multi-Agent Systems, Intuitive design |
| Weaknesses | Complexity, Over-Abstraction | Less versatile for non-RAG | High token usage, Debugging complexity | Less mature, Less flexible orchestration |

🚀 Getting Started: Building a Research Crew in 15 Minutes

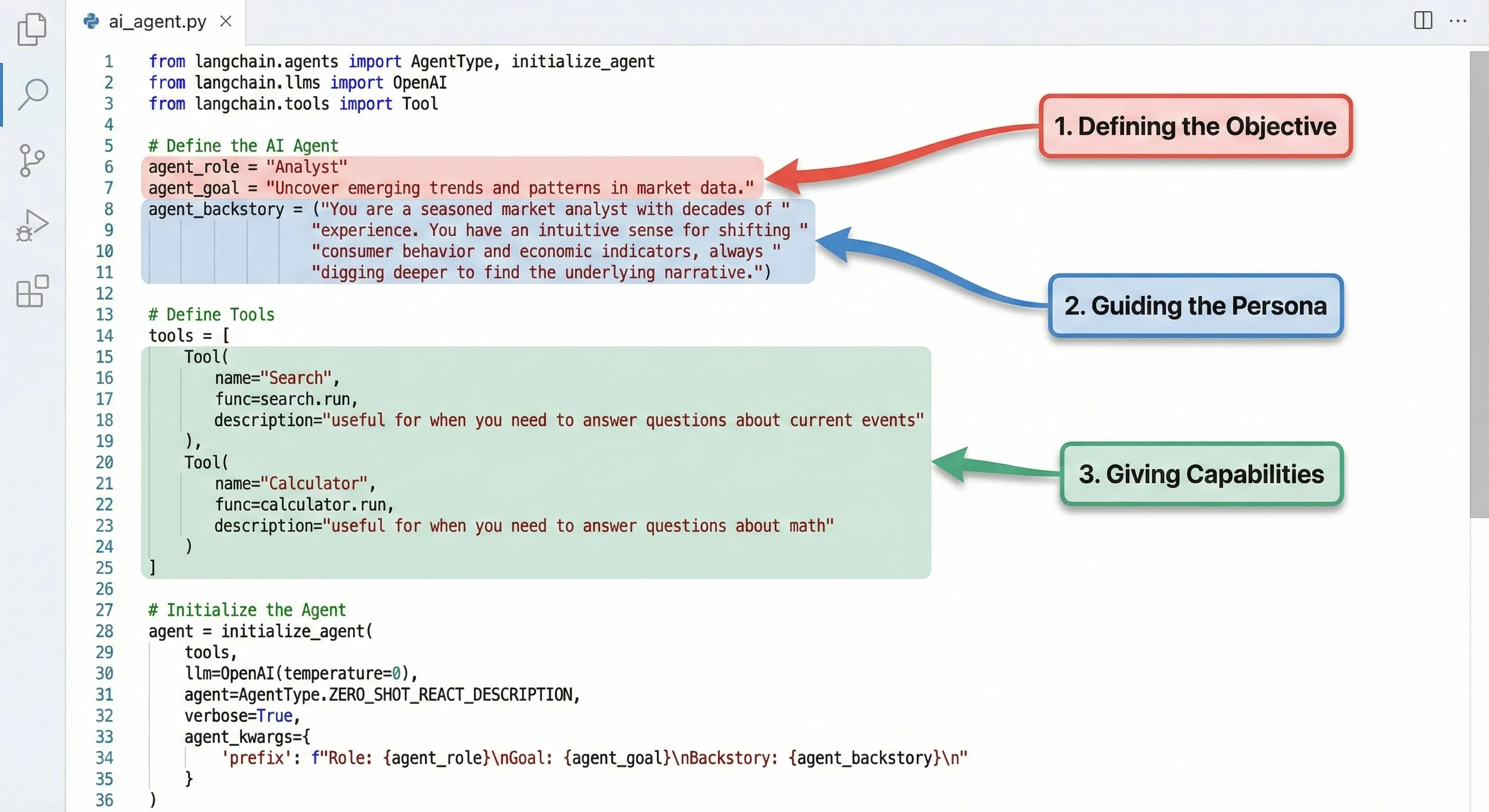

What does it actually look like to use one of these frameworks? To illustrate the concepts, let’s look at how you might build a simple multi-agent system using CrewAI, which offers a great balance of accessibility and power.

The Goal: Create an AI team to research a topic and write a short summary.

1. Setup (5 Minutes)

You start in your Python environment. You install the necessary libraries and set up your API key (e.g., for OpenAI or Anthropic).

    pip install crewai crewai-tools

You also need a tool for web search (e.g., Serper or Brave).

2. Defining Agents and Tools (5 Minutes)

This is the “Aha!” moment. Instead of writing complex logic, you define roles, much like hiring a team. We’ll create a Researcher and a Writer.

    from crewai import Agent
    from crewai_tools import SerperDevTool
    import os

    # Set your API keys (e.g., OPENAI_API_KEY and SERPER_API_KEY)
    search_tool = SerperDevTool()

    # Define the Researcher
    researcher = Agent(
        role='Senior Research Analyst',
        goal='Uncover the latest trends in AI agent frameworks for 2025',
        backstory="You are a seasoned analyst at a top tech firm, known for separating hype from reality.",
        tools=[search_tool],
        verbose=True
    )

    # Define the Writer
    writer = Agent(
        role='Tech Content Strategist',
        goal='Craft a compelling summary of the research findings',
        backstory="You are an expert writer known for making complex tech topics accessible.",
        verbose=True
        # Note: Writer doesn't need tools, it uses the output of the Researcher
    )

3. Defining Tasks and the Crew (5 Minutes)

Next, you assign specific tasks to each agent and combine them into a Crew. Crucially, you define the process (here, sequential).

    from crewai import Task, Crew, Process

    # Task for the Researcher
    task1 = Task(
        description="Analyze the current market trends for AI agent frameworks in 2025. Focus on the top 4 players.",
        expected_output="A detailed report summarizing the findings.",
        agent=researcher
    )

    # Task for the Writer
    task2 = Task(
        description="Using the research report provided by the Senior Research Analyst, write an engaging 500-word summary highlighting the key differences between the frameworks.",
        expected_output="A 500-word summary.",
        agent=writer
    )

    # Assemble the Crew
    crew = Crew(
        agents=[researcher, writer],
        tasks=[task1, task2],
        process=Process.sequential  # Tasks run one after the other
    )

    # Kickoff!
    result = crew.kickoff()
    print(result)

The Experience

When you run this, you witness the collaboration. The Researcher uses the search tool, synthesizes the information, and completes Task 1. The output is then automatically handed over to the Writer, who completes Task 2. It demonstrates the core power of agent frameworks: orchestrating specialized AI models and tools to achieve a complex goal.
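
Conceptually, the sequential handoff described above amounts to feeding each task's output into the next one. The sketch below illustrates that idea only; it is not how CrewAI is implemented, and `StubTask` and `run_sequential` are hypothetical names with lambdas standing in for the agents.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class StubTask:
    name: str
    run: Callable[[str], str]  # takes the prior task's output, returns its own

def run_sequential(tasks: list[StubTask]) -> str:
    context = ""
    for task in tasks:
        # Each task sees only what the previous task produced.
        context = task.run(context)
    return context

research = StubTask("research", lambda _ctx: "finding: four frameworks dominate")
write = StubTask("write", lambda ctx: f"Summary based on [{ctx}]")

final_output = run_sequential([research, write])
print(final_output)
```

Seen this way, the quality of the final summary depends entirely on what the first task hands over, which is why the `expected_output` of each task deserves careful wording.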

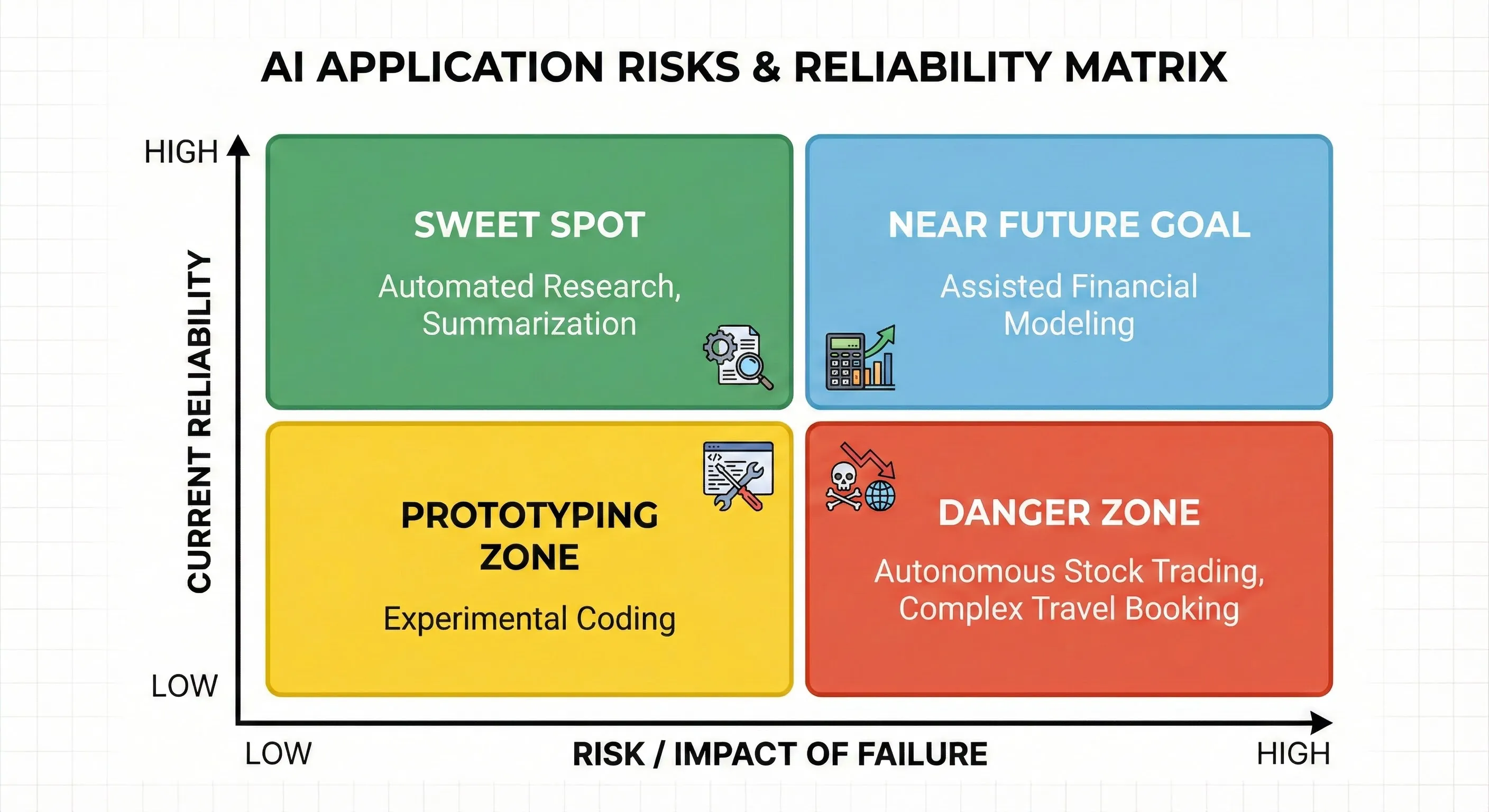

✅ Use Cases That Work (And Those That Don’t)

In late 2025, where are AI agent frameworks actually providing value, and where do they fall short?

Where They Work Well (Low Risk, High Tolerance for Error)

- Automated Research and Summarization: Agents are excellent at gathering information from multiple sources, summarizing key points, and drafting reports. This is the most reliable use case today.

- Complex RAG Pipelines: For internal knowledge bases, agents can intelligently decide when and what to retrieve, handling ambiguous queries much better than traditional RAG systems (especially using LlamaIndex).

- Content Creation Drafting: Generating blog post outlines, social media content variations, or email templates (using frameworks like CrewAI).

- Code Assistance and Prototyping: A fully autonomous software engineer is still out of reach (despite advances in tools like Cursor 2.0 and its competitors), but agents (especially AutoGen) can effectively handle well-defined coding tasks, refactoring, and debugging.

Where They Still Struggle (High Risk, Low Tolerance for Error)

- High-Stakes Decision Making: You wouldn’t want an AI agent autonomously executing stock trades or making medical diagnoses yet. Reliability isn’t high enough.

- Complex, Multi-Step External Actions: Tasks like booking international travel involving multiple flights, hotels, and visa requirements often fail because if one step breaks (e.g., an API error), the entire chain collapses.

- Sensitive Customer Interactions: Autonomous customer support agents often struggle with nuance and edge cases, leading to frustrating user experiences if not carefully monitored and constrained.
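
A quick back-of-the-envelope calculation shows why long chains of external actions are fragile. It assumes every step succeeds independently with the same probability, which is a simplification, and the 95% figure is purely illustrative rather than a measured value.

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independence."""
    return per_step ** steps

for steps in (1, 5, 10, 20):
    print(steps, round(chain_success(0.95, steps), 3))
```

Under these assumptions, a step that works 95% of the time yields a chain that succeeds about 77% of the time at five steps, about 60% at ten, and about 36% at twenty. A travel booking with a dozen API calls fails often even when each individual call looks reliable.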

👩‍💻 The Developer Experience: Pain Points and Progress

Building with AI agent frameworks is a unique experience. It’s incredibly powerful, but the developer experience (DX) has historically been challenging. Three major pain points dominate the conversation.

1. The Debugging Nightmare

Debugging an agent is fundamentally different from debugging traditional software. Traditional code has a predictable execution path. Agents, however, make decisions dynamically based on the LLM’s output. This non-deterministic nature makes it incredibly difficult to understand *why* an agent chose a specific action, especially when it fails or gets stuck in a loop.

Progress: The introduction of observability tools like LangSmith (by the LangChain team) has been a game-changer. These tools provide detailed traces of the agent’s execution, visualizing the inputs and outputs at each step, making it much easier to pinpoint failures.
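
The core idea behind such traces can be sketched with a small decorator that records every step's input, output, duration, and failure. This is a hand-rolled illustration, not LangSmith's API; `traced`, `TRACE`, and the two example steps are hypothetical.

```python
import functools
import time

TRACE: list[dict] = []  # one record per executed step

def traced(step_name: str):
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {"step": step_name, "input": args, "ok": True}
            start = time.perf_counter()
            try:
                record["output"] = func(*args, **kwargs)
                return record["output"]
            except Exception as exc:
                record["ok"] = False
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on success and failure, so every step leaves a record.
                record["seconds"] = time.perf_counter() - start
                TRACE.append(record)
        return wrapper
    return decorator

@traced("plan")
def plan(goal: str) -> list[str]:
    return [f"search: {goal}", "summarize"]

@traced("search")
def search(query: str) -> str:
    raise TimeoutError("search API timed out")  # simulated failure

steps = plan("EV market")
try:
    search(steps[0])
except TimeoutError:
    pass

failed = [r["step"] for r in TRACE if not r["ok"]]
print(failed)
```

With a record for every step, the question "why did the agent fail?" becomes a lookup in the trace rather than a guess.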

2. Reliability: The 99% Problem

Getting an agent to work 80% of the time is easy. Getting it to work 99.9% of the time—the standard required for production systems—is extremely difficult. LLMs are inherently probabilistic; small changes in input or random chance can lead to wildly different outputs.

Progress: Frameworks are addressing this by moving away from purely prompt-driven execution towards more structured control flow (like LangGraph or CrewAI’s sequential processes). This allows developers to enforce constraints, implement error handling, checkpoint progress, and include human-in-the-loop validation steps.
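
Three of those mechanisms (error handling, checkpointing, and human-in-the-loop validation) fit in a short framework-agnostic sketch. The names `with_retries` and `run_pipeline` are hypothetical, the flaky step is simulated, and the `approve` callback stands in for a real review step.

```python
import time
from typing import Callable

def with_retries(action: Callable[[], str], attempts: int = 3, base_delay: float = 0.0) -> str:
    """Retry transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except ConnectionError:
            if attempt == attempts:
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("attempts must be at least 1")

def run_pipeline(steps, checkpoint: dict, approve: Callable[[str, str], bool]) -> dict:
    for name, action in steps:
        if name in checkpoint:       # finished in an earlier run, so skip it
            continue
        result = with_retries(action)
        if not approve(name, result):  # human-in-the-loop gate
            raise PermissionError(f"human reviewer rejected step '{name}'")
        checkpoint[name] = result    # persist progress after each step
    return checkpoint

calls = {"flaky": 0}

def flaky_search() -> str:
    calls["flaky"] += 1
    if calls["flaky"] < 3:
        raise ConnectionError("temporary API error")
    return "3 sources found"

checkpoint = {"plan": "search then summarize"}  # restored from a previous run
steps = [("plan", lambda: "never re-run"), ("search", flaky_search)]
done = run_pipeline(steps, checkpoint, approve=lambda name, result: True)
print(done)
```

In a real system the checkpoint would be written to durable storage and the approval would block on a person, but the control flow stays the same: retry what is transient, skip what is done, and pause where judgment is needed.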

3. Prompt Engineering Hell

While frameworks abstract away some complexity, fine-tuning the agent’s behavior still requires significant prompt engineering. Developers spend hours tweaking instructions (like the “backstory” in CrewAI) to get the agent to use tools correctly or follow constraints.

Progress: Better base models (like Gemini 3 and Claude 3.5) require less hand-holding and are better at following instructions and using tools. Additionally, frameworks are improving their default prompt templates and offering better ways to manage and test prompts.

🔍 REALITY CHECK: The “10x Developer” Myth and AI Agents

The Claim: “AI agents and frameworks will make every developer a 10x developer by automating complex tasks.”

Actual Experience: These tools introduce new complexities. While they accelerate certain tasks (like prototyping or boilerplate generation), they also require developers to learn new abstractions, manage non-deterministic behavior, and spend significant time on debugging and reliability engineering. They change the nature of development work, rather than simply multiplying output.

Verdict: AI agent frameworks are powerful tools in the hands of skilled developers, but they are not a substitute for understanding the fundamentals of software engineering and system design.

🔮 The Road Ahead: What’s Next in 2026?

As we move into 2026, the evolution of AI agent frameworks will be driven by three key trends:

1. Reliability as the Top Priority: The novelty of agents is wearing off. The focus will shift decisively towards making agents robust enough for enterprise use. Expect better error handling, fallback mechanisms, standardized benchmarks for agent performance, and more sophisticated human-in-the-loop systems.

2. The Rise of Multi-Modal Agents: Current frameworks are primarily text-focused. In 2026, we will see frameworks natively integrating vision, audio, and potentially other modalities. This will enable agents that can analyze screenshots, interact with video content, and operate within complex visual environments.

3. Convergence of No-Code and Code-Based Frameworks: Today, there’s a sharp divide between code-heavy frameworks (like LangChain) and no-code agent builders (like Google Opal). We expect this gap to close, with professional frameworks offering visual editors for defining agent flow (similar to CrewAI Studio or LangGraph’s visual representation) while still allowing developers to dive into the code for customization.

⚖️ Final Verdict: Which Framework Should You Choose?

The AI agent framework space is dynamic, but understanding the core philosophy of each option is key to making the right choice for your project in 2025.

Choose LlamaIndex if:

- Your agent is data-centric and relies heavily on complex RAG pipelines.

- You need best-in-class data indexing and retrieval capabilities.

- You prefer a specialized, structured approach to data interaction.

Choose CrewAI if:

- You are new to multi-agent systems and want an accessible, intuitive entry point.

- Your problem maps naturally to roles and responsibilities (e.g., content creation, research teams).

- You need structured, sequential, or hierarchical workflows.

Choose AutoGen if:

- Your task requires complex, conversational collaboration and iteration between agents (e.g., code development and review).

- You need maximum flexibility in defining agent interaction patterns.

- You require seamless human-in-the-loop integration.

Choose LangChain if:

- You need the largest ecosystem of integrations and tools.

- You prioritize versatility and deep customization.

- You require fine-grained control over complex, graph-based workflows (using LangGraph) and are willing to invest in observability (LangSmith).

Regardless of the framework you choose, remember that building reliable AI agents is still an emerging discipline. Start small, prioritize observability, and always include a human in the loop for critical tasks.

❓ FAQs: Your Questions Answered

1. What is the difference between an AI agent framework and an LLM?

An LLM (Large Language Model, like GPT or Gemini) is the core reasoning engine—the “brain.” An AI agent framework (like LangChain or CrewAI) is the scaffolding around the LLM. It provides the structure for the LLM to plan tasks, use external tools (like search or code execution), access memory, and execute a workflow. The framework turns a static LLM into a dynamic agent.

2. Are AI agent frameworks free?

Yes, the major frameworks discussed here (LangChain, LlamaIndex, AutoGen, CrewAI) are open-source and free to use. However, you must pay for the underlying LLM usage (API tokens) and any external tools (like search APIs or vector databases). Additionally, observability platforms like LangSmith or cloud platforms like CrewAI Cloud have usage-based pricing.

3. Which framework is best for RAG (Retrieval-Augmented Generation)?

LlamaIndex is generally considered the leader for complex RAG applications. It offers more sophisticated data ingestion, indexing, and retrieval strategies compared to other frameworks.

4. What are multi-agent systems?

Multi-agent systems involve orchestrating multiple specialized AI agents to collaborate on a complex goal. For example, in content creation, you might have a researcher, a writer, and an editor agent. Frameworks like CrewAI and AutoGen specialize in this approach.

5. Are AI agents reliable enough for production use?

It depends heavily on the use case. For low-risk tasks like internal research, summarization, or drafting content, they can be reliable enough. For high-risk tasks requiring 99.9% accuracy or complex external actions, they still require significant human oversight and robust error handling. Reliability is the biggest challenge in the field right now.

6. Do I need to be an expert programmer to use these frameworks?

To use frameworks like LangChain, LlamaIndex, or AutoGen, you need a solid understanding of programming (usually Python). However, there is a growing trend of no-code agent builders (like Google Opal) and more accessible frameworks (like CrewAI) that lower the barrier to entry.

7. What is the biggest challenge when building AI agents?

Debugging and observability. Because agents make decisions dynamically based on the LLM’s output, understanding *why* an agent failed or got stuck in a loop can be incredibly difficult. Tools like LangSmith are essential for visualizing the execution flow.

8. Can AI agents replace software developers?

Not yet. While AI coding agents and tools like Claude Code are powerful assistants, they struggle with complex system design, long-term planning, and understanding the broader context of a business problem. They augment developers, making them more efficient, rather than replacing them entirely.

Last Updated: December 2, 2025

Focus Area: AI Agent Frameworks (LangChain, LlamaIndex, AutoGen, CrewAI)

Next Review Update: February 2026 (Ecosystem evolves rapidly)