The Bottom Line

If you remember nothing else from this DeepSeek R1 review: This is the AI chatbot that made Nvidia’s stock drop 17% in a single day. It matches OpenAI’s o1 reasoning model on math and coding benchmarks while costing 96% less to use via API ($0.55 vs $15 per million input tokens). The web interface is completely free with no usage limits.

The catch? Your data goes to servers in China, the model censors topics sensitive to the Chinese government, and you can’t generate images or use voice features. If you care about privacy or need a full-featured assistant, stick with ChatGPT 5.2. If you want maximum reasoning power at minimum cost and you’re working on non-sensitive projects, DeepSeek R1 is genuinely impressive.

Best for: Developers, students, researchers working on coding, math, and logic problems. Skip if: You handle sensitive business data, need image generation, or want a conversational assistant.

Too Long; Didn’t Read?

- ✓ Massive Savings: 96% cheaper than OpenAI’s o1 model ($0.55 vs $15 per million input tokens).

- ✓ Coding Powerhouse: Matches top models for math and coding tasks.

- ✓ Open Source: Can be run locally to bypass privacy concerns.

- ⚠️ Privacy Risk: Data goes to Chinese servers if using the web interface.

Sections in this review:

- 🤖 What DeepSeek R1 Actually Does

- 💥 The “$6 Million Sputnik Moment” Explained

- 🚀 Getting Started: Your First 10 Minutes

- ⚡ Features That Actually Matter

- 🥊 DeepSeek R1 vs ChatGPT o1: Real Tests

- 🔒 The Privacy Problem: What You Need to Know

- 🚫 Chinese Censorship: How Bad Is It?

- 💰 Pricing Breakdown: The Real Costs

- 👤 Who Should Use DeepSeek R1 (And Who Shouldn’t)

- 💬 What Reddit and Developers Actually Think

- ❓ FAQs: Your Questions Answered

- ⚖️ Final Verdict

What DeepSeek R1 Actually Does (Not What the Headlines Say)

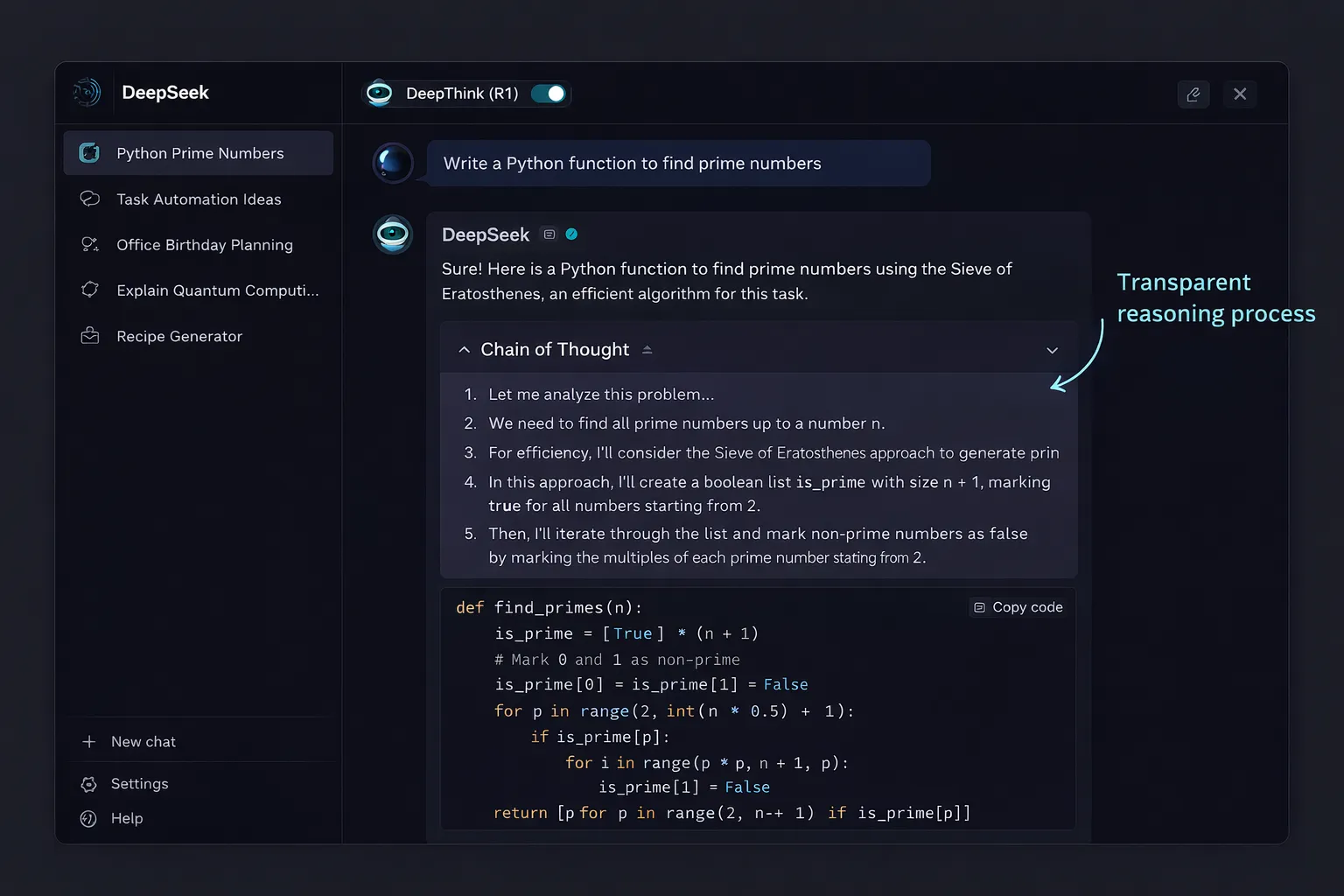

DeepSeek R1 is a “reasoning model” from Chinese AI startup DeepSeek. What does that mean in plain English? Unlike standard chatbots that jump straight to an answer, R1 reasons before it responds. It still generates text one word at a time, but it first works through the problem step by step, and you can watch that “chain of thought” unfold before it gives you an answer.

Think of it as the difference between a student who blurts out answers and one who shows their work on a math test. DeepSeek R1 shows its work. Every time.
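On the web, the chain of thought appears in its own collapsible panel. If you run the open weights yourself, R1-style models typically emit it inline, between `<think>` and `</think>` tags, before the final answer. Here’s a minimal sketch for separating the two, assuming that tag convention (`split_reasoning` is a hypothetical helper name, not part of any DeepSeek library):

```python
def split_reasoning(raw: str) -> tuple[str, str]:
    """Split raw R1-style output into (reasoning, answer).

    Assumes the reasoning ends at a closing </think> tag. Some chat templates
    put the opening <think> in the prompt, so it may be missing from the
    generated text; it is stripped only if present.
    """
    closing = "</think>"
    if closing not in raw:
        # No reasoning block found: treat the whole text as the answer.
        return "", raw.strip()
    reasoning, _, answer = raw.partition(closing)
    reasoning = reasoning.strip()
    if reasoning.startswith("<think>"):
        reasoning = reasoning[len("<think>"):]
    return reasoning.strip(), answer.strip()


raw_output = "<think>\n17 has no divisors other than 1 and itself.\n</think>\n\n17 is prime."
thinking, answer = split_reasoning(raw_output)
print(answer)  # 17 is prime.
```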

Here’s what it can actually do:

- Solve complex math problems: Scored 79.8% on AIME 2024 (the American Invitational Mathematics Examination), matching OpenAI’s o1

- Write and debug code: Reached a 2,029 Elo rating on Codeforces competitive programming problems

- Reason through logic puzzles: Shows transparent step-by-step reasoning you can follow

- Analyze documents: 128K token context window handles long documents well

Here’s what it cannot do:

- Generate images: Text only, no DALL-E equivalent

- Voice conversations: No speech input or output

- Real-time web browsing: Limited compared to Perplexity AI

- Discuss certain topics: Censored on Chinese political content (more on this below)

🔍 REALITY CHECK

Marketing Claims: “Performance on par with OpenAI-o1 across math, code, and reasoning tasks.”

Actual Experience: Mostly true for math and coding. For general knowledge and creative writing, ChatGPT and Claude feel more polished. The reasoning transparency is genuinely useful for debugging logic.

Verdict: Impressive for specific tasks, not a general replacement.

The “$6 Million Sputnik Moment” Explained

In January 2025, DeepSeek’s app, powered by R1, became the #1 free app on Apple’s App Store in the US, overtaking ChatGPT. Nvidia’s stock fell 17% in one day, wiping out $593 billion in market value. Tech journalists called it a “Sputnik moment” for American AI.

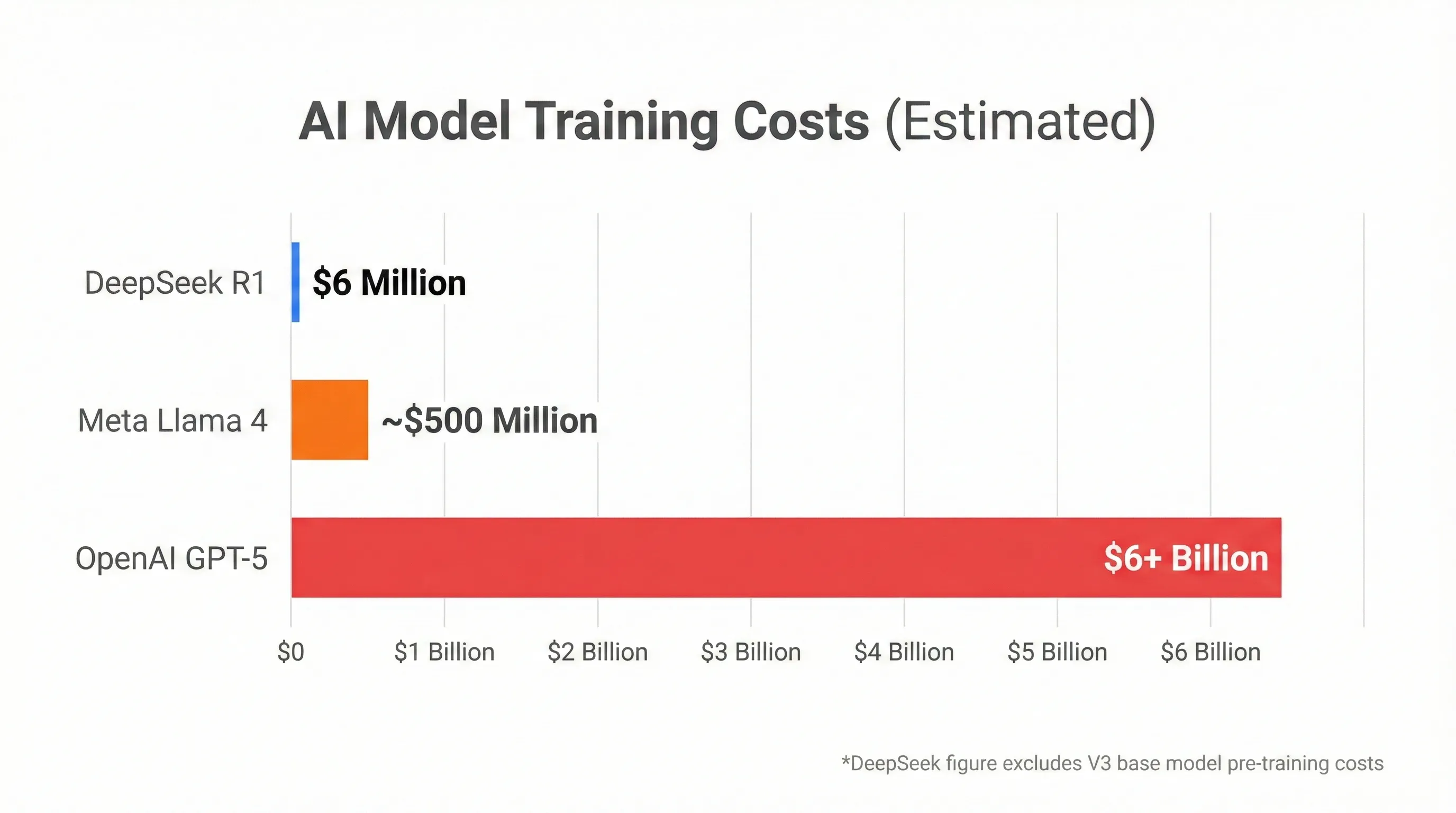

Why all the drama? Because DeepSeek reported that the final training run of V3, the base model R1 is built on, cost just $5.58 million on roughly 2,000 Nvidia H800 GPUs. Meanwhile, OpenAI allegedly spent over $6 billion on GPT-5 development. The message was clear: You don’t need billions to build frontier AI.

Is the $6 million figure accurate? Probably not the full picture. It covers only V3’s final training run at assumed GPU rental rates, leaving out prior research, failed experiments, hardware purchases, and the extra reinforcement-learning training that turned V3 into R1. But even skeptical estimates put the total at 1/10th to 1/50th of what American labs spend. That’s still a massive efficiency gap.
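The headline number is straightforward GPU-rental arithmetic. Using the totals DeepSeek published for V3’s final training run (about 2.788 million H800 GPU-hours, priced at an assumed $2 per GPU-hour, on a 2,048-GPU cluster), a quick sanity check looks like this. It covers the final run only, not research, failed experiments, or hardware purchases:

```python
# Totals as published in DeepSeek's V3 technical report (final training run only).
GPU_HOURS = 2_788_000     # H800 GPU-hours: pre-training + context extension + post-training
RENTAL_RATE_USD = 2.00    # rental price per GPU-hour assumed in the report
CLUSTER_SIZE = 2_048      # H800 GPUs in the training cluster

cost = GPU_HOURS * RENTAL_RATE_USD
# Wall-clock time if every GPU in the cluster ran continuously.
days = GPU_HOURS / CLUSTER_SIZE / 24

print(f"${cost / 1e6:.3f}M")   # $5.576M
print(f"~{days:.0f} days")     # ~57 days
```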

The technical secret sauce? DeepSeek uses a “Mixture of Experts” (MoE) architecture. The model has 671 billion total parameters, but only 37 billion are active for each token it processes. Think of it like a company with 100 specialists: instead of paying all 100 for every task, you only call in the 5-6 experts needed for that specific problem.
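To make the “call in only the experts you need” idea concrete, here’s a toy top-k router in plain Python. It’s a generic illustration of MoE gating under simplified assumptions (scalar inputs, eight made-up experts, softmax over the selected scores), not DeepSeek’s actual router, which works with many more experts and real neural-network layers:

```python
import math
from collections.abc import Callable


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(scores: list[float], k: int) -> dict[int, float]:
    """Toy top-k gating: keep the k highest-scoring experts and renormalize
    their weights so they sum to 1. All other experts stay idle."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    return dict(zip(top, weights))


def moe_layer(x: float, experts: list[Callable[[float], float]],
              scores: list[float], k: int = 2) -> float:
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route(scores, k).items())


# Eight hypothetical "experts", each just a different scaling function.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # router scores for one token

print(route(scores, k=2))               # experts 4 and 1 are selected
print(moe_layer(1.0, experts, scores))  # only 2 of 8 experts did any work

# Share of R1's parameters active per token, from the figures above.
print(f"{37 / 671:.1%}")  # 5.5%
```

Routing happens per token, so different words in the same prompt can land on different experts. That’s how a 671B-parameter model can run with roughly the per-token compute of a 37B one.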

Sam Altman responded on X: “deepseek’s r1 is an impressive model… we will obviously deliver much better models and also it’s legit invigorating to have a new competitor!”

Getting Started: Your First 10 Minutes with DeepSeek R1

Here’s exactly what happened when I signed up:

Minutes 1-2: Went to chat.deepseek.com. Created an account with Google login (you can also use a phone number or email). No credit card required.

Minutes 3-4: The interface looks almost identical to ChatGPT’s. There’s a toggle between “DeepSeek” (fast, general) and “DeepThink (R1)” (slower, reasoning mode). I selected DeepThink.

Minutes 5-7: Asked it to solve a coding problem: “Write a Python script that finds all prime numbers between 1 and 1000, optimized for speed, with comments explaining the algorithm.”

The magic happened here. Before answering, DeepSeek R1 showed me its thinking process in a collapsible “Chain of Thought” section. It considered multiple approaches (brute force, Sieve of Eratosthenes), evaluated trade-offs, then wrote optimized code with clear comments.

Minutes 8-10: Tested the code. It worked perfectly on the first try. The Sieve implementation was textbook-correct and ran in 0.02 seconds.
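For reference, here’s what a textbook Sieve of Eratosthenes for that prompt looks like. This is our own minimal sketch, not a copy of DeepSeek’s output:

```python
import math


def primes_up_to(n: int) -> list[int]:
    """Sieve of Eratosthenes: return all primes <= n."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    # Any composite <= n has a prime factor <= sqrt(n), so we can stop there.
    for p in range(2, math.isqrt(n) + 1):
        if is_prime[p]:
            # Start at p*p: smaller multiples of p have a smaller prime
            # factor and were already crossed out.
            for multiple in range(p * p, n + 1, p):
                is_prime[multiple] = False
    return [i for i, flag in enumerate(is_prime) if flag]


primes = primes_up_to(1000)
print(len(primes), primes[-1])  # 168 997
```

Stopping the outer loop at √n and starting each inner loop at `p * p` is what makes the sieve fast compared with trial-dividing every number.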

Total time to useful output: Under 10 minutes, including account creation. The experience was smoother than I expected for a free tool.

🔍 REALITY CHECK

Marketing Claims: “Free access to the main model without limitation on queries.”

Actual Experience: True, but with caveats. During peak hours (US evening times), I got “Server busy” errors multiple times. The web search feature was often unavailable. The core chat worked, but not always instantly.

Verdict: Free and genuinely useful, but plan for occasional downtime.

Features That Actually Matter (And Three That Don’t)

Features Worth Your Attention

1. Transparent Chain-of-Thought Reasoning

This is the killer feature. When you ask DeepSeek R1 a complex question, it shows you exactly how it thinks. I asked: “Why does the Monty Hall problem have a counterintuitive solution?” The model walked through the probability tree, explained common misconceptions, and even identified where human intuition goes wrong. You don’t just get an answer; you get an education.
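The probability tree the model walked through can be checked by brute force. This sketch (ours, not DeepSeek’s output) enumerates every equally likely pairing of car location and first pick, assuming the standard rules: the host knows where the car is and always opens a different door hiding a goat:

```python
from fractions import Fraction
from itertools import product


def win_probability(switch: bool) -> Fraction:
    """Exact Monty Hall odds by enumerating all 9 (car, first pick) pairs.

    Each pair is equally likely. When the first pick is the car, the host
    could open either goat door, but the outcome is the same either way,
    so taking the first valid door doesn't change the result.
    """
    doors = range(3)
    wins = 0
    cases = 0
    for car, pick in product(doors, doors):
        cases += 1
        # Host opens a door that is neither the player's pick nor the car.
        opened = next(d for d in doors if d != pick and d != car)
        if switch:
            final = next(d for d in doors if d != pick and d != opened)
        else:
            final = pick
        wins += final == car
    return Fraction(wins, cases)


print(win_probability(switch=False))  # 1/3
print(win_probability(switch=True))   # 2/3
```

Switching wins in every case where your first pick was wrong, which happens two times out of three.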

2. Open-Source Weights (MIT License)

Unlike ChatGPT or Claude, you can download DeepSeek R1 and run it locally. For developers, this is huge. You can fine-tune it on your data, deploy it on your servers, and avoid sending sensitive information to China. The “distilled” smaller versions (1.5B to 70B parameters) run on consumer hardware.

3. API Pricing That Makes You Double-Check

$0.55 per million input tokens. $2.19 per million output tokens. For comparison, OpenAI’s o1 charges $15 input and $60 output. That’s roughly 27x cheaper on both input and output. For high-volume applications, this changes the economics completely.
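DeepSeek’s API follows the OpenAI chat-completions format, so calling R1 is an ordinary HTTPS request. A minimal standard-library sketch, assuming the `https://api.deepseek.com/chat/completions` endpoint, the `deepseek-reasoner` model name, and the `reasoning_content` response field described in DeepSeek’s docs at the time of writing (verify against the current docs; the `DEEPSEEK_API_KEY` variable name is our own choice):

```python
import json
import os
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # assumption: check current docs
MODEL = "deepseek-reasoner"  # assumption: model name DeepSeek maps to R1


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but don't send) a chat-completion request for the reasoning model."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str) -> tuple[str, str]:
    """Send the request and return (reasoning, answer). Needs network and a key."""
    req = build_request(prompt, os.environ["DEEPSEEK_API_KEY"])
    with urllib.request.urlopen(req, timeout=300) as resp:
        message = json.load(resp)["choices"][0]["message"]
    # The reasoning trace comes back in a separate field from the final answer.
    return message.get("reasoning_content", ""), message["content"]
```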

Features That Don’t Matter (For Most Users)

1. The 671 Billion Parameter Count

Marketing loves big numbers. But since only 37B parameters are active for each token, the “671B” figure overstates how much of the model is working at any given moment. Judge by output quality, not parameter count.

2. “Beating” OpenAI on Benchmarks

DeepSeek roughly matches o1 on MATH-500 (97.3% vs 96.4%) and MMLU (90.8% vs 91.8%). These marginal differences don’t translate to noticeably different user experiences. Both feel smart.

3. Web Search Feature

DeepSeek has web search, but it’s unreliable and often disabled during peak times. If you need current information, Perplexity AI is far superior.

DeepSeek R1 vs ChatGPT o1: Real Tests (Not Marketing)

I ran the same tasks through both models. Here’s what happened:

| Test | DeepSeek R1 | ChatGPT o1 | Winner |
|---|---|---|---|
| Code: Async Python refactor | 12 seconds thinking, caught a race condition I missed | 8 seconds, correct but missed the edge case | DeepSeek R1 |
| Math: Complex calculus problem | Correct answer, showed all steps | Correct answer, cleaner formatting | Tie |
| Logic: Modified Trolley Problem | Missed the twist (dead people already dead) | Caught the twist immediately | ChatGPT o1 |
| Creative: Write a product email | Functional but robotic | Engaging and persuasive | ChatGPT o1 |
| Speed (average response) | 28 seconds | 15 seconds | ChatGPT o1 |
| Price (1M output tokens) | $2.19 | $60.00 | DeepSeek R1 |


The Verdict: DeepSeek R1 wins on coding and price. ChatGPT o1 wins on creative tasks, speed, and catching subtle logical tricks. For pure engineering work, DeepSeek is the better value. For anything involving nuance or polish, ChatGPT justifies its price.

For a deeper comparison with DeepSeek’s general-purpose model, see our DeepSeek V3.2 vs ChatGPT-5 analysis.

The Privacy Problem: What You Need to Know

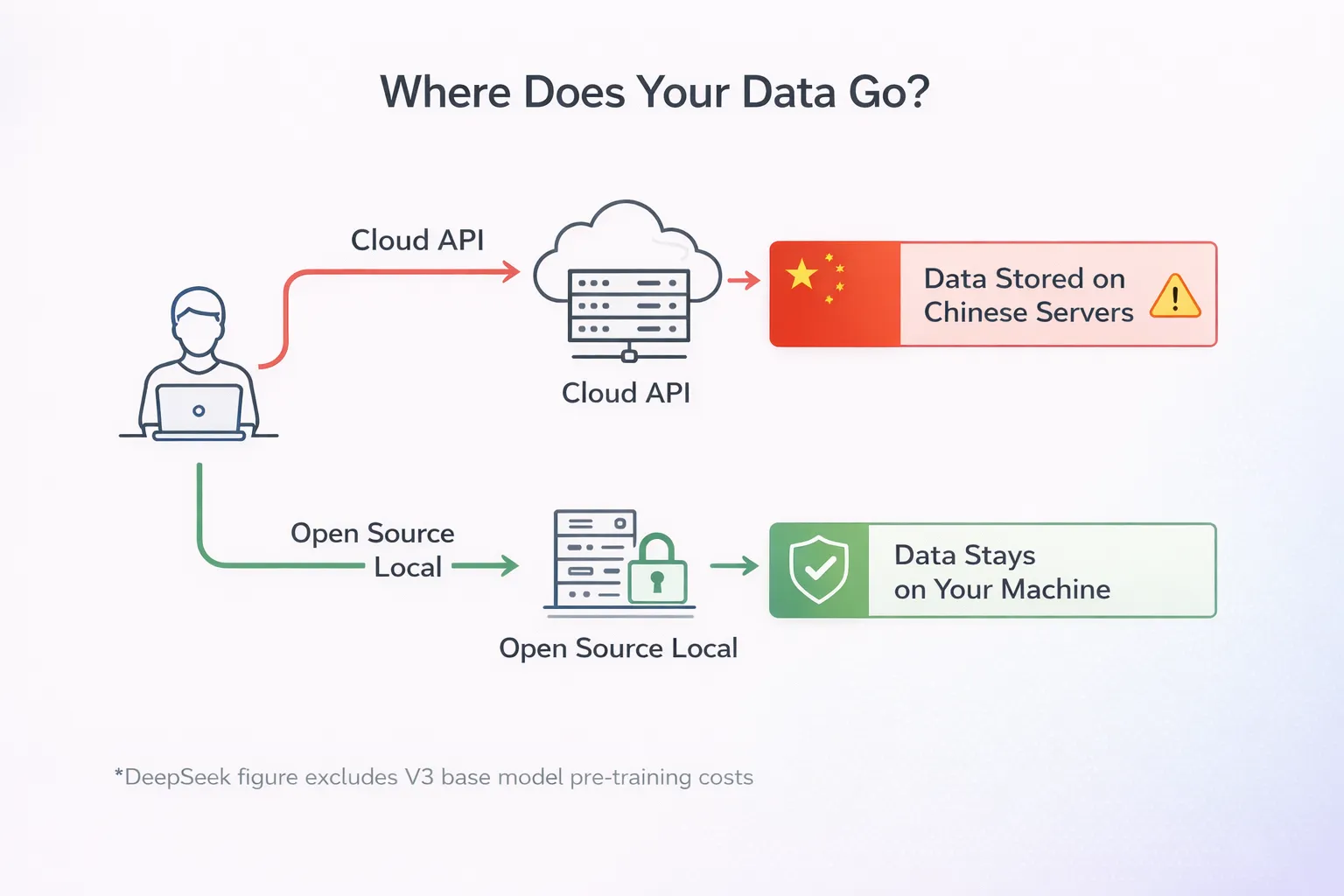

Let’s be direct: when you use chat.deepseek.com, your data goes to servers in China. DeepSeek’s privacy policy explicitly states this.

What they collect:

- Your text inputs and conversation history

- Device information and IP address

- “Keystroke patterns or rhythms” (yes, really)

- Usage analytics and performance data

Under Chinese law, companies must cooperate with government data requests. There’s no equivalent to GDPR protection. Italy has already banned DeepSeek over data privacy concerns. Australia’s science minister raised similar alarms.

The Workaround: Download the open-source model and run it locally. This keeps your data on your machine. Several platforms (AWS, Azure, Together.ai) offer hosted versions that don’t route through Chinese servers. Perplexity AI created “R1 1776,” an uncensored version hosted on US infrastructure.

My recommendation: Use the web interface for non-sensitive tasks (learning, coding tutorials, general questions). For anything involving business strategy, personal information, or competitive intelligence, use local deployment or a US-hosted alternative.

🔍 REALITY CHECK

Marketing Claims: DeepSeek doesn’t directly address data concerns in marketing.

Actual Experience: The privacy policy is clear about Chinese server storage. This isn’t hidden, but it’s not prominently displayed either. Most users clicking “Accept” have no idea.

Verdict: Serious concern for professional use. Fine for casual experimentation.

Chinese Censorship: How Bad Is It?

I asked DeepSeek R1 about Tiananmen Square. Here’s what happened: The model started generating a response about the 1989 protests, including details about the military crackdown. Then, mid-response, it deleted everything and replaced it with: “Sorry, that’s beyond my current scope. Let’s chat about math, coding and logic problems instead.”

This pattern repeats for questions about:

- Taiwan independence

- Uyghur treatment in Xinjiang

- Hong Kong protests

- Comparisons of Xi Jinping to Winnie the Pooh

- Falun Gong

Security researchers at Promptfoo tested 1,156 politically sensitive prompts. They concluded the censorship is “brute force”: crude, but comprehensive on anything relating to Chinese Communist Party policy.

The Interesting Part: The censorship targets explicitly Chinese topics. Ask about American political controversies, North Korean human rights, or Russian authoritarian practices, and R1 answers freely. The mid-response deletions point to a moderation layer running on top of the web app, though the open weights also carry some trained-in refusals. Either way, it’s a restriction on what R1 will say, not a limit on what it can reason about.

Workarounds: Users have found success by omitting China-specific context, framing questions as hypotheticals, or using the open-source version locally with the censorship removed. Perplexity’s R1 1776 has been fine-tuned to remove these restrictions entirely.

Pricing Breakdown: The Real Costs

DeepSeek R1’s pricing is its most disruptive feature:

| Usage Type | DeepSeek R1 | OpenAI o1 | Savings |
|---|---|---|---|
| Web Chat | Free, unlimited | $20/month (ChatGPT Plus) or $200/month (Pro) | $240-2,400/year |
| API Input | $0.55/1M tokens | $15.00/1M tokens | 96% cheaper |
| API Output | $2.19/1M tokens | $60.00/1M tokens | 96% cheaper |
| Cache Hit (Repeated Context) | $0.14/1M tokens | $7.50/1M tokens (cached input) | 98% cheaper |


Real-World Example: A startup processing 10 million input tokens and 10 million output tokens per day for customer support:

- OpenAI o1: ~$750/day = $22,500/month

- DeepSeek R1: ~$28/day = $840/month

- Monthly savings: $21,660
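The example works out if you assume 10 million input and 10 million output tokens per day, billed at the list prices quoted in this review, with no cache discounts and a 30-day month. A quick calculator to reproduce it (the `PRICES` table and `daily_cost` helper are hypothetical names for illustration):

```python
# Per-million-token list prices quoted in this review (USD).
PRICES = {
    "OpenAI o1": {"input": 15.00, "output": 60.00},
    "DeepSeek R1": {"input": 0.55, "output": 2.19},
}


def daily_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost for one day's traffic at list price (no caching discounts)."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Assumption: 10M input + 10M output tokens per day, 30-day month.
for model in PRICES:
    day = daily_cost(model, 10_000_000, 10_000_000)
    print(f"{model}: ${day:,.2f}/day, ${day * 30:,.2f}/month")
```

Run as-is, this gives $750.00/day for o1 versus $27.40/day for DeepSeek, or $822/month rather than $840; the figures above round DeepSeek’s daily cost up to $28 before multiplying by 30.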

This is why enterprise developers are paying attention, privacy concerns aside.

Hidden Costs: If you run DeepSeek locally, you need serious hardware. The full 671B model requires 8x NVIDIA H200 GPUs ($32,000+ per GPU). The distilled 32B version runs on consumer GPUs but with reduced capability. For most users, the free web interface or API is the practical choice.
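A rough way to sanity-check those hardware numbers is to estimate how much memory the weights alone need: parameters × bits per parameter ÷ 8. The sketch assumes 8-bit weights for the full model (R1’s released weights are FP8) and 4-bit quantization for the distilled versions, a common choice for local use; real deployments need extra room for the KV cache and runtime overhead:

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Rough lower bound on memory for the weights alone, in decimal GB.

    Ignores KV cache, activations, and framework overhead, which all add more.
    """
    bytes_total = params_billions * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


H200_MEMORY_GB = 141  # HBM capacity per H200 GPU

full = weight_memory_gb(671, 8)  # full model at 8-bit weights
print(f"671B @ 8-bit: ~{full:.0f} GB vs {8 * H200_MEMORY_GB} GB on 8x H200")
print(f"32B  @ 4-bit: ~{weight_memory_gb(32, 4):.0f} GB")
print(f"7B   @ 4-bit: ~{weight_memory_gb(7, 4):.1f} GB")
```

At roughly 671 GB for the weights alone, the full model needs a multi-GPU server; eight H200s (1,128 GB) leave headroom for everything else. A 4-bit 32B distill needs around 16 GB, which is why it fits on a high-end consumer card.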

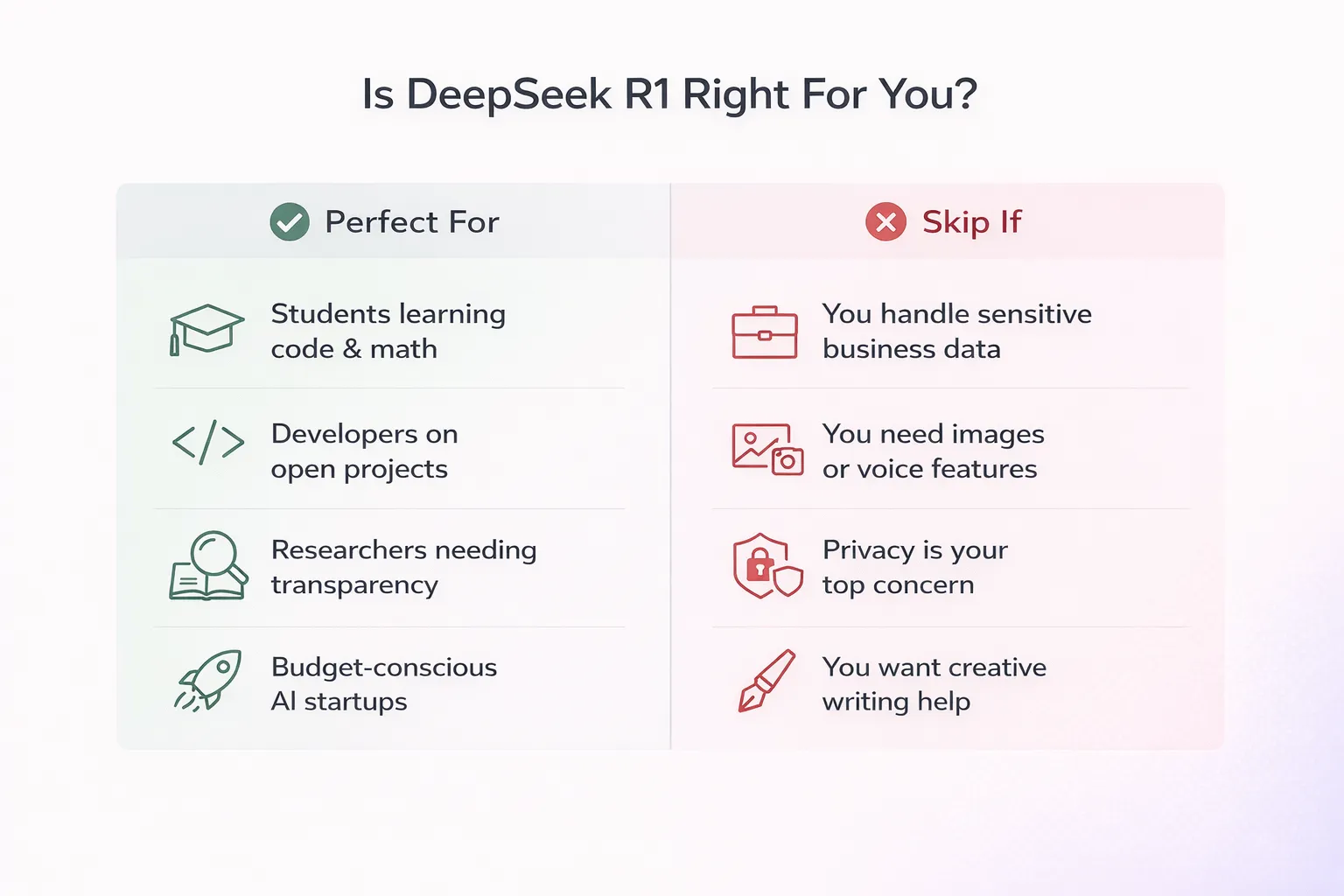

Who Should Use DeepSeek R1 (And Who Shouldn’t)

✅ DeepSeek R1 Is Perfect For:

1. Students Learning to Code or Do Math

The transparent reasoning is incredible for education. You don’t just get answers; you understand the process. And it’s free, which matters when you’re broke.

2. Developers Working on Non-Sensitive Projects

For open-source work, personal projects, or anything you’d share publicly anyway, DeepSeek R1’s coding ability is world-class. The API pricing makes prototyping affordable.

3. Researchers Who Need Transparent Reasoning

Academic work often requires showing methodology. DeepSeek’s chain-of-thought output gives you a written record of the reasoning, not just conclusions.

4. Budget-Conscious Startups Building AI Products

At roughly 27x cheaper than OpenAI’s o1, DeepSeek changes unit economics for AI-powered products. Just be transparent with users about data handling.

❌ Skip DeepSeek R1 If:

1. You Handle Sensitive Business or Personal Data

Don’t put customer data, financial records, HR documents, or competitive intelligence through Chinese servers. Period.

2. You Need Image Generation or Voice

DeepSeek R1 is text-only. For multimodal work, ChatGPT 5.2 or Gemini 3 are your options.

3. You Want a Conversational Assistant

R1 is optimized for reasoning, not chat. For everyday questions, creative writing, or casual conversation, ChatGPT feels more natural.

4. Your Work Involves Chinese-Sensitive Topics

If you’re a journalist, researcher, or activist working on topics the Chinese government considers sensitive, don’t use DeepSeek. The censorship is real, and the data collection is concerning.

What Reddit and Developers Actually Think

I spent hours reading r/artificial, r/ChatGPT, r/OpenAI, and developer forums. Here’s the unfiltered consensus:

The Positive Takes

“For pure coding, DeepSeek is insane. It caught a bug in my production code that I’d missed for weeks.”— r/ChatGPT user

“I cancelled my ChatGPT Plus subscription. For my use case (learning algorithms), DeepSeek is actually better.”— r/artificial user

“The reasoning transparency alone is worth switching. I finally understand HOW these models think.”— Developer on Hacker News

The Negative Takes

“Great model, terrible ethics. I’m not comfortable sending my data to Beijing.”— r/OpenAI user

“It goes off the rails in longer sessions. Had to restart conversations multiple times.”— r/artificial user

“The censorship is annoying even if you don’t care about Chinese politics. It refused to help me with a historical fiction novel set in Taiwan.”— Writer on r/ChatGPT

The Pattern

Developers love it. Privacy-conscious users hate it. Casual users find it capable but “robotic” compared to ChatGPT. The consensus: use it for technical work, avoid it for sensitive applications.

FAQs: Your Questions Answered

Q: Is DeepSeek R1 really free?

A: Yes, the web interface at chat.deepseek.com is completely free with no usage limits. The API has pay-as-you-go pricing starting at $0.55 per million input tokens, significantly cheaper than competitors.

Q: Is DeepSeek R1 safe to use?

A: For non-sensitive tasks, it’s as safe as any AI chatbot. For business or personal sensitive data, there are legitimate concerns since data is stored on servers in China. Run the open-source version locally or use US-hosted alternatives for sensitive work.

Q: How does DeepSeek R1 compare to ChatGPT?

A: DeepSeek R1 matches or exceeds ChatGPT o1 on math and coding benchmarks while being 96% cheaper. ChatGPT is better for creative writing, casual conversation, and multimodal tasks (images, voice). DeepSeek has censorship on Chinese political topics; ChatGPT does not.

Q: Can I run DeepSeek R1 locally?

A: Yes, DeepSeek R1 is open-source under MIT license. The full 671B model needs expensive hardware (8x H200 GPUs), but distilled versions (1.5B to 70B parameters) run on consumer GPUs. Ollama makes local deployment relatively simple.
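Once Ollama is installed and a model is pulled (for example `ollama pull deepseek-r1:7b`, one of the distilled tags in Ollama’s library), it serves a local HTTP API on port 11434. A minimal standard-library sketch of querying it; the model tag and helper names are illustrative, so swap in whichever size your hardware can handle:

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_payload(prompt: str, model: str = "deepseek-r1:7b") -> bytes:
    """JSON body for a single, non-streaming generation request."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def generate(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the prompt to a locally running Ollama server and return the text."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        # With stream=False, the generated text arrives in the "response" field.
        return json.load(resp)["response"]
```

Because the request goes to `localhost`, prompts never leave your machine, which is the whole point of running it locally for sensitive work.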

Q: What is DeepSeek R1’s censorship like?

A: R1 refuses to discuss topics sensitive to the Chinese government: Tiananmen Square, Taiwan independence, Uyghur treatment, Hong Kong protests, and criticism of CCP leadership. The censorship is “brute force.” Running the open-source model locally removes the web app’s filter, though some refusals are trained into the weights; Perplexity’s R1 1776 is post-trained to strip those out.

Q: When is DeepSeek R2 coming?

A: R2 was expected in mid-2025 but was delayed after founder Liang Wenfeng expressed dissatisfaction with performance. In January 2026, DeepSeek published new training research that analysts believe will be implemented in either R2 or V4. No official release date has been announced.

Q: Does DeepSeek R1 have a mobile app?

A: Yes, DeepSeek has iOS and Android apps that reached #1 on App Store charts in January 2025. The apps offer the same functionality as the web interface, including DeepThink (R1) reasoning mode.

Q: Is DeepSeek R1 better than Claude for coding?

A: It depends on the task. DeepSeek R1 excels at pure algorithmic problems and shows transparent reasoning. Claude Opus 4.5 holds the SWE-bench record (80.9%) for real-world software engineering tasks. For learning and debugging, DeepSeek’s transparency is valuable. For production code, Claude’s reliability edge matters.

Final Verdict: Should You Use DeepSeek R1?

Rating: 4.5/5

DeepSeek R1 is a genuinely impressive achievement that deserves attention, if not unconditional trust.

✅ The Good

- ✓ World-class reasoning at rock-bottom prices

- ✓ Transparent “Chain of Thought”

- ✓ Open Source (run it locally!)

❌ The Bad

- ✘ Privacy Policy (Chinese servers)

- ✘ Censorship on sensitive topics

- ✘ Potential downtime

Avoid for: Sensitive Data, Politics, Creative Writing

Try it today: chat.deepseek.com (free, no credit card required)


Related Reading

AI Chatbot Reviews

- ChatGPT 5.2 Review: The ‘Code Red’ Response To Gemini 3

- Claude Opus 4.5 vs Gemini 3.0: Which Model Wins?

- Perplexity AI Review: The Free AI Search Engine

- Kimi K2 Thinking Review: The Free Reasoning Agent


AI Coding Tools

- Claude Code Review: The Reality After Claude Opus 4.5

- Cursor 2.0 Review: The $9.9B AI Code Editor

- Best AI Developer Tools 2025


Last Updated: January 4, 2026

DeepSeek R1 Version Tested: R1-0528

Next Review Update: February 4, 2026 (or sooner if R2 launches)