Welcome to Our Seedance Review

⚡ TL;DR – The Bottom Line

🎬 What it is: ByteDance’s AI video generator with multimodal reference input — upload images, videos, and audio, then direct the AI using @ commands.

💰 Price: Starting at ~$9.60/month on Dreamina vs Sora 2’s $200/month for full 1080p access, with a free tier and a $0.14 trial option.

🎯 Best for: Creators who need character consistency, beat-synced music videos, and reference-heavy workflows.

🔊 Key strength: Native audio-visual sync with lip-sync in 8+ languages and 90%+ usable output rate.

✅ Verdict: The most controllable AI video tool available — a genuine paradigm shift from “type and pray” to “show and direct.”

⚠️ The catch: Limited to 15-second clips, primarily accessible through Chinese platforms, and international rollout is still pending.

Seedance 2.0 is ByteDance’s answer to Sora 2 and Veo 3.1, and it may have just leapfrogged both. Think of it as having a film director living inside your browser who actually listens to your instructions. You upload reference photos, videos, and audio, then tell the AI exactly how to use each one. The result? Cinema-quality clips with synchronized audio at a fraction of what competitors charge. Dreamina plans start at roughly $9.60/month, versus $200/month for full 1080p access to Sora 2. The catch? It’s currently in limited beta, primarily accessible through Chinese platforms like Jimeng (Dreamina), and international access is still rolling out. Best for content creators who need character consistency and multimodal control. Skip it if you need videos longer than 15 seconds in a single generation or if you require an English-language community ecosystem. For a broader look at the AI video landscape, check our VEO 3.1 and Sora 2 comparison.

🚀 What Seedance 2.0 Actually Does (And Why It’s Going Viral)

Here’s the simplest explanation: Seedance 2.0 is an AI video maker that copies what you show it instead of guessing from words alone. Most AI video generators work like this: you type a description, cross your fingers, and hope the AI interprets “woman in red dress dancing” the way you imagined. With Seedance 2.0, you upload the actual red dress photo, a reference dance video, and even a music track, then the AI combines them precisely.

Developed by ByteDance’s Seed Research Lab (yes, the company behind TikTok), Seedance 2.0 launched on February 10, 2026, as a limited beta on the Jimeng AI platform. Within 48 hours, demo videos had millions of views on Chinese social media, AI stocks surged, and industry veterans started calling it a “singularity moment” for video creation.

The core technology shift is what ByteDance calls “dual-branch diffusion transformer architecture.” In plain English? The AI generates video and audio simultaneously, not separately. When a character speaks in a Seedance 2.0 video, their lips actually match the words. When a door slams, you hear it at the exact right moment. Previous tools generated silent video, forcing you to add audio in post-production using tools like ElevenLabs or manual editing in DaVinci Resolve.

🔍 REALITY CHECK

Marketing Claims: “Director-level creative control over every aspect of your video generation process”

Actual Experience: The @ reference system genuinely delivers more control than any competitor. You can specify which uploaded image defines the character, which video sets the camera movement, and which audio drives the rhythm. However, “director-level” is a stretch. You’re still limited to 15-second clips and can’t control every individual frame.

✅ Verdict: More like “assistant director” level, but that’s still leagues ahead of the competition.

📰 Breaking News: The Launch That Shook Markets

Seedance 2.0 didn’t just launch. It went nuclear. According to PYMNTS, ByteDance officially released Seedance 2.0 on February 12, positioning it for professional film, eCommerce, and advertising production. The launch immediately went viral in China, with Reuters comparing the buzz to last year’s DeepSeek moment.

The financial impact was immediate. Chinese AI media stocks surged 7-20%, with companies like Huace Media Group and Perfect World seeing significant gains. The reaction wasn’t just hype. Creators on Weibo shared short films and narrative clips generated from simple prompts, with some videos drawing millions of views. One tester described it as producing footage that was virtually indistinguishable from real video.

The timing matters. This launch puts ByteDance into direct competition with OpenAI (Sora 2), Google (Veo 3.1), and Kuaishou (Kling 3.0) in the most competitive period the AI video industry has ever seen. If you’ve been tracking the Kling AI evolution, you know how fast this space moves.

⚙️ How the Seedance 2.0 Workflow Actually Feels

Traditional AI video tools work like shouting instructions to someone wearing earplugs. You describe what you want, the AI takes a guess, and you roll the dice. Seedance 2.0 flips this entirely by introducing what ByteDance calls “multimodal all-round reference.” Think of it as handing your AI assistant a mood board, a choreography video, and a soundtrack, then saying “make something exactly like this.”

The system accepts up to 12 reference files at once, plus a text prompt, for four input types in total. The per-type limits are 9 images, 3 videos (15 seconds total), and 3 audio files (MP3, 15 seconds total). Since those limits add up to 15, the 12-file cap means you can’t max out every type in the same generation. Each file is assigned a specific role using the @ symbol in your prompt:

Example prompt structure: “Use @Image1 as the main character. Copy the camera movement from @Video1. Match the rhythm and pacing of @Audio1. Set the scene in @Image2’s environment. Style: cinematic, warm lighting.”

The AI doesn’t just understand what each file contains. It understands the role you’ve assigned to it. An uploaded dance video becomes a choreography template. A product photo becomes the visual reference. A music track becomes the pacing guide. This is fundamentally different from tools like Runway Gen-4, where you generate each clip independently and hope the style stays consistent.
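To make the @ reference idea concrete, here is a minimal Python sketch that checks a set of references against the limits quoted in this review and then assembles a prompt in the style shown above. The function name `build_prompt` and the tag format are our own illustration, not an official ByteDance SDK or API, and the sketch does not check the 15-second duration limits.

```python
from collections import Counter

# Limits as quoted in this review. These names are hypothetical, not an official SDK.
MAX_PER_TYPE = {"Image": 9, "Video": 3, "Audio": 3}
MAX_TOTAL_FILES = 12


def build_prompt(roles, style=""):
    """Validate reference counts, then assemble an @-tagged prompt string.

    `roles` is a list of (tag, instruction) pairs,
    e.g. ("Image1", "as the main character").
    """
    # A file may be mentioned more than once, so count unique tags only.
    tags = {tag for tag, _ in roles}
    # Strip the trailing index to get the type: "Image2" -> "Image".
    counts = Counter(tag.rstrip("0123456789") for tag in tags)

    for kind, count in counts.items():
        if kind not in MAX_PER_TYPE:
            raise ValueError(f"unknown reference type: {kind!r}")
        if count > MAX_PER_TYPE[kind]:
            raise ValueError(
                f"{count} {kind} files exceeds the limit of {MAX_PER_TYPE[kind]}"
            )
    if len(tags) > MAX_TOTAL_FILES:
        raise ValueError(
            f"{len(tags)} files exceeds the combined limit of {MAX_TOTAL_FILES}"
        )

    sentences = [f"Use @{tag} {instruction}." for tag, instruction in roles]
    if style:
        sentences.append(f"Style: {style}.")
    return " ".join(sentences)


prompt = build_prompt(
    [
        ("Image1", "as the main character"),
        ("Video1", "for the camera movement"),
        ("Audio1", "for rhythm and pacing"),
        ("Image2", "as the environment"),
    ],
    style="cinematic, warm lighting",
)
print(prompt)
```

The useful habit here is deciding each file's role before you write the prompt, so every upload has exactly one job.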

Another standout feature is automatic multi-shot storytelling. Most AI video tools give you one continuous shot per generation. Seedance 2.0 automatically cuts between multiple camera angles within a single generation, parsing your narrative description into wide shots, close-ups, and medium shots without you having to specify each one individually.

🎯 Features That Actually Matter (Tested)

Native Audio-Visual Synchronization. This is the headline feature. Seedance 2.0 generates audio alongside video in one pass, including dialogue with lip-sync in 8+ languages, ambient sound effects, and background music. The lip-sync quality is solid across English, Mandarin, Japanese, Korean, Spanish, French, German, and Portuguese. Not perfect on every syllable, but good enough that you’re not dubbing over it in post.

90%+ Usable Output Rate. This is the number that should make competitors nervous. Industry averages for AI video generators hover around 20% usable output, meaning creators typically need five generations to get one clip worth keeping. Seedance 2.0 reportedly pushes this to over 90%. For a 90-minute project, that efficiency gap translates to an estimated 80% reduction in wasted credits and production costs.
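For readers who want to check the math, the short Python sketch below works through the numbers. It assumes every generation succeeds independently at the stated rate and costs the same number of credits, which is our simplification, not something ByteDance has published.

```python
def generations_per_keeper(usable_rate):
    """Expected number of generations needed to get one usable clip."""
    return 1.0 / usable_rate


baseline = generations_per_keeper(0.20)  # industry average quoted above
claimed = generations_per_keeper(0.90)   # Seedance 2.0's reported rate

# Fraction of generations (and so credits) saved per usable clip.
reduction = 1 - claimed / baseline

print(f"Baseline: {baseline:.2f} generations per usable clip")
print(f"Claimed:  {claimed:.2f} generations per usable clip")
print(f"Reduction in generations: {reduction:.0%}")
```

Under those assumptions the saving comes out just under 80%, which lines up with the reported figure.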

Character Consistency. Faces, clothing, and even small text stay consistent throughout the video and across multiple shots. This has been the Achilles’ heel of every AI video generator, and early testers say Seedance 2.0 handles it better than any competitor. You upload reference images of your character from multiple angles, and the model maintains their identity across cuts.

Beat-Sync Mode. Upload a music track, and the generated video lands motion and transitions on the beat. No other major generator does this natively. For TikTok and Reels creators who time visuals to trending audio, this feature alone could justify the subscription.

🔍 REALITY CHECK

Marketing Claims: “90%+ usable output rate, reducing production costs by 80%”

Actual Experience: Community reports from early testers on Xiaohongshu (China’s Instagram equivalent) support the high success rate claim. One tester noted “almost no wasted outputs, good framing, good quality.” However, these results come from experienced prompt engineers who understand the @ reference system. First-time users with basic text prompts will likely see lower success rates.

✅ Verdict: The 90% claim appears credible for experienced users, but expect a learning curve before hitting that rate.

2K Resolution and Fast Generation. Videos generate at up to 2K resolution (upscaled from native 1080p), with generation times around 60 seconds for short clips. ByteDance claims 30% faster processing than Seedance 1.0, which translates to roughly 41 seconds for a 5-second 1080p clip.

💰 Seedance 2.0 Review: Pricing Breakdown

Seedance 2.0 isn’t a standalone product. It’s a model within ByteDance’s creative platforms, primarily Dreamina (called Jimeng in China). What you’re paying for is a platform subscription that unlocks Seedance 2.0 alongside other tools.

💰 Monthly Cost Comparison: Seedance 2.0 vs Competitors

Jimeng/Dreamina (Chinese Market): Starting at approximately 69 RMB/month (~$9.60 USD). New users can unlock the model via a 1 RMB (~$0.14) trial and receive approximately 260 daily login credits. Among the Seedance 2.0 access routes, this is by far the cheapest paid entry point.

Dreamina International: Credit-based plans ranging from free (225 daily tokens shared across all tools) to $84/month for heavy production use. The free tier lets you test the model, but a single Seedance 2.0 generation can consume a significant chunk of your daily allowance.

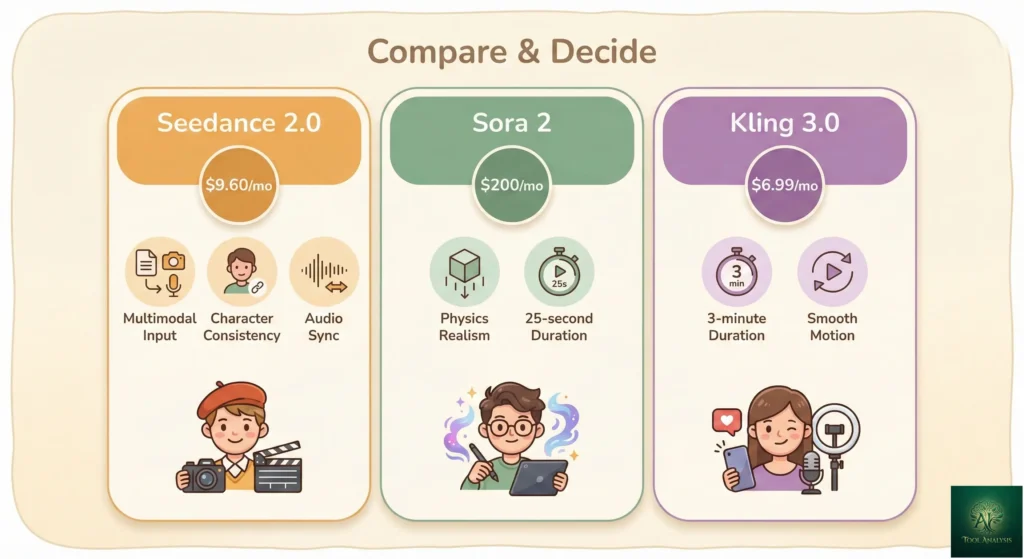

How it compares to competitors: Sora 2 starts at $200/month (ChatGPT Pro) for full 1080p access. The $20/month ChatGPT Plus tier caps video at 720p. Veo 3.1 requires Google AI Ultra at $250/month. Kling AI starts lower, at $6.99/month, but offers different capabilities. That puts Seedance 2.0 near the bottom of the price range for creator-grade AI video.

The hidden cost advantage: A traditional 5-second VFX shot might cost $400+ and take weeks to produce. Seedance 2.0 can generate a comparable clip for roughly $0.42 in under two minutes. Even accounting for the subscription cost, the economics are dramatically different.
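A quick back-of-the-envelope check in Python shows the scale of that gap. The figures are the ones quoted in this review. The per-clip cost ignores failed generations and queue time, so treat the ratios as rough.

```python
# Figures quoted in this review (USD).
VFX_SHOT_USD = 400.00
SEEDANCE_CLIP_USD = 0.42
DREAMINA_MONTHLY_USD = 9.60
SORA_PRO_MONTHLY_USD = 200.00

# How many Seedance clips cost the same as one traditional VFX shot.
cost_ratio = VFX_SHOT_USD / SEEDANCE_CLIP_USD

# How many months of Dreamina equal one month of Sora 2 at the 1080p tier.
subscription_ratio = SORA_PRO_MONTHLY_USD / DREAMINA_MONTHLY_USD

print(f"One VFX shot costs about as much as {cost_ratio:.0f} Seedance clips")
print(f"One month of Sora 2 Pro costs about {subscription_ratio:.1f} months of Dreamina")
```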

⚔️ Head-to-Head: Seedance 2.0 vs Sora 2 vs Kling 3.0

🎯 Feature Capability Comparison

| Feature | Seedance 2.0 | Sora 2 | Kling 3.0 |
|---|---|---|---|
| Developer | ByteDance | OpenAI | Kuaishou |
| Max Resolution | 2K (upscaled) | 1080p | 4K/60fps |
| Max Duration | 15 seconds | 25 seconds | 2-3 minutes |
| Native Audio | ✅ Yes (8+ languages) | ⚠️ Basic | ✅ Yes |
| Multimodal Input | ✅ 12 files (text/image/video/audio) | ❌ Text + image only | ⚠️ Text + image + motion brush |
| Character Consistency | ⭐ Industry-leading | ✅ Strong | ✅ Good |
| Physics Realism | ✅ Strong | ⭐ Best-in-class | ✅ Strong for human motion |
| Multi-Shot Storytelling | ✅ Automatic | ✅ Storyboard feature | ⚠️ Manual extension |
| Entry Price | ~$9.60/month | $200/month | $6.99/month |
| Free Tier | ✅ Limited credits | ⚠️ 720p only ($20/mo) | ✅ 66 daily credits |
| Beat-Sync | ✅ Native | ❌ | ❌ |
| Best For | Reference-heavy workflows, ads, music videos | Physics-heavy scenes, cinematic realism | Quick social content, longer videos |

The honest verdict: No single tool wins everywhere. Seedance 2.0’s multimodal reference system is genuinely unmatched for creators who have specific visual references they want replicated. Sora 2 remains king for physics realism (water, glass, fabric behave more naturally). Kling 3.0 dominates on duration, generating videos up to 3 minutes versus Seedance’s 15-second cap. Many professional creators are using multiple models, choosing the right tool for each specific shot.
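If you plan to work around the 15-second cap by stitching clips together in an editor, a tiny Python helper shows how many generations a longer piece needs. This is our own planning sketch. It assumes full-length clips with no overlap at the cuts.

```python
import math


def clips_needed(target_seconds, max_clip_seconds=15):
    """Minimum number of clips needed to cover a target duration."""
    if target_seconds <= 0:
        raise ValueError("target_seconds must be positive")
    return math.ceil(target_seconds / max_clip_seconds)


for target in (30, 60, 180):
    print(f"{target}s video: at least {clips_needed(target)} clips")
```

A 3-minute piece that Kling 3.0 could attempt in a single pass would take at least 12 separate Seedance generations, each needing consistent references.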

👤 Who Should Use This (And Who Shouldn’t)

Use Seedance 2.0 if you:

- Create short-form video content for TikTok, Reels, or YouTube Shorts where character consistency across clips matters.
- Run a marketing agency or e-commerce business needing product videos that maintain brand consistency without expensive photoshoots.
- Work with reference materials (mood boards, style guides, choreography videos) and want an AI that actually follows them.
- Need beat-synced video content for music or dance-related projects.
- Want cinema-quality AI video without the $200-$250/month price tag of Sora 2 or Veo 3.1.

Skip Seedance 2.0 if you:

- Need videos longer than 15 seconds in a single clip (choose Kling AI instead, which supports up to 3 minutes).
- Require an English-language community with extensive tutorials and templates (Runway and Sora have larger Western user bases).
- Need a built-in video editor for post-production work (Runway’s editing suite is more mature).
- Work in regions where Chinese platform access is restricted, or are uncomfortable with data being processed through ByteDance infrastructure.
- Need absolute physics perfection for glass shattering, liquid dynamics, or fabric simulation (Sora 2 still leads here).

🔑 How to Access Seedance 2.0 Right Now

As of February 2026, Seedance 2.0 is primarily available through Chinese platforms. Here’s the honest breakdown of your options:

Option 1: Jimeng (Dreamina) Platform (Best Full Experience). This is the official home. Navigate to jimeng.jianying.com, create an account, and purchase the 1 RMB trial membership to unlock Seedance 2.0. You’ll need a Chinese phone number (+86) for verification. Daily login gives you approximately 260 free credits. Expect queue wait times of 1-2+ hours during peak hours for free users.

Option 2: Little Skylark (Xiaoyunque) App (Best Free Option). Download the app on iOS or Android, register, and receive approximately 1,200 credits. As of early February 2026, Seedance 2.0 generations on this platform are free (no credit deduction), though this is a limited-time promotion. You must manually select Seedance 2.0 from the model dropdown.

Option 3: Third-Party API Platforms (Easiest for International Users). Platforms like WaveSpeedAI, Atlas Cloud, and Kie AI are integrating Seedance 2.0 into their unified API offerings. This avoids the Chinese phone number requirement but costs more per generation.

Important note for international creators: The global version of Dreamina has not yet integrated the Seedance 2.0 model. ByteDance has confirmed international rollout is coming but hasn’t shared a specific timeline. For now, international users can get similar results (though without the @ reference system) from Veo 3.1 or Sora 2.

⚠️ The Elephant in the Room: Privacy and Ethics

Seedance 2.0 launched with a feature that could generate highly accurate voice characteristics from facial photos alone, without the authorization of the person pictured. ByteDance suspended this feature within days after public backlash, implementing face upload restrictions and requiring live verification before creating digital avatars. The company also strengthened content review to prevent deepfake misuse.

🔍 REALITY CHECK

Marketing Claims: “Commitment to ethical AI development”

Actual Experience: ByteDance moved quickly to suspend the controversial face-to-voice feature and implement safeguards. Credit where it’s due. However, the fact that this capability shipped at all raises legitimate questions about internal review processes. The training data transparency issue (what content was used to train the model) remains completely unaddressed.

✅ Verdict: ByteDance is responsive to criticism but reactive rather than proactive on ethics. Use accordingly.

The broader concern is data sovereignty. Content generated through Jimeng/Dreamina is processed on ByteDance infrastructure. For individual creators making social media content, this likely isn’t a deal-breaker. For enterprise clients handling sensitive brand assets or proprietary content, it’s a legitimate consideration. Chinese AI regulations are evolving rapidly, and ByteDance has been actively complying with government crackdowns on unlabeled AI-generated content.

💬 What Creators Are Actually Saying

Community sentiment is overwhelmingly positive, with some important caveats. On Reddit and creator forums, Seedance 2.0 keeps coming up as “the best AI video generator for character consistency.” Early adopters on Chinese platforms describe being genuinely shocked by the quality.

The positive consensus centers on three points: the @ reference system delivers control that no competitor matches, character consistency across shots actually works (which has been the holy grail of AI video), and the 90%+ success rate dramatically reduces the frustration of wasted generations.

The criticism clusters around accessibility (Chinese phone number required, long queue times), the 15-second duration cap (significantly shorter than Kling’s 3 minutes), and the lack of a built-in editing suite (you’ll still need Premiere, DaVinci Resolve, or CapCut for post-production). Some users also note that while the multimodal reference system is powerful, it has a real learning curve. Mastering the @ command syntax and understanding which references produce the best results takes time.

🔮 The Road Ahead: What’s Next for Seedance

Short-term (1-3 months): International Dreamina rollout with full Seedance 2.0 support. Third-party API availability through platforms like BytePlus. English-language interface improvements and community building. Expect public pricing standardization for international users.

Medium-term (3-6 months): Extended video duration (likely 30-60 seconds per generation). Integration into CapCut, ByteDance’s video editing app used by 1B+ users. API access for developers building on the Volcano Engine platform. Improved physics simulation to close the gap with Sora 2.

Long-term (6-12 months): Extended narrative coherence for 60-180 second continuous scenes. Real-time generation capabilities. Deeper integration with ByteDance’s content ecosystem (TikTok, Douyin). Enterprise-grade features with data residency options for international clients.

🏆 Final Verdict

Seedance 2.0 is not just another AI video generator. It’s a genuine paradigm shift in how creators interact with video generation AI. The move from “type and pray” to “show and direct” via the multimodal reference system is the most significant workflow improvement in this space since Runway introduced video generation. The 90%+ success rate, native audio synchronization, and character consistency make it the first AI video tool that feels genuinely production-ready rather than experimental.

Use Seedance 2.0 if: You have reference materials you want replicated, need character consistency, create short-form content, or want cinema-quality video without Sora 2’s $200/month price tag.

Stick with Kling AI if: You need longer videos (up to 3 minutes), want the cheapest entry point ($6.99/month), or prefer a more mature English-language ecosystem.

Stick with Runway Gen-4 if: You need an integrated editing suite, prioritize Hollywood-grade character consistency, or require established Western enterprise support.

Ready to try it? Start with the Jimeng platform for the full experience, or wait for the international Dreamina rollout, which may arrive in late February or early March 2026, though ByteDance has not confirmed a date.

❓ FAQs: Your Questions Answered

Q: Is Seedance 2.0 free to use?

A: Partially. Daily login on Jimeng gives you approximately 260 credits once the 1 RMB trial has unlocked the model, the Dreamina International free tier offers 225 daily tokens shared across all tools, and the Little Skylark app currently offers free Seedance 2.0 generations during a promotional period. Full access to the multimodal reference system and commercial licensing starts at approximately $9.60/month.

Q: How does Seedance 2.0 compare to Sora 2?

A: Seedance 2.0 wins on multimodal control (12-file input), character consistency, beat-sync, and price ($9.60/month vs $200/month). Sora 2 wins on physics realism, clip duration (25s vs 15s), and Western accessibility. Choose based on your primary workflow need.

Q: Can international users access Seedance 2.0?

A: International access is limited as of February 2026. Options include the Chinese Jimeng platform (requires a +86 phone number), third-party API providers, or waiting for the international Dreamina rollout, which may arrive in late February or early March 2026, though ByteDance has not confirmed a date.

Q: What is the maximum video length?

A: Each generation produces 4-15 seconds. The extension feature adds segments, but seams may be visible. For longer continuous videos, Kling AI supports up to 3 minutes.

Q: Is my data safe with Seedance 2.0?

A: Content is processed on ByteDance’s infrastructure. The company has suspended controversial features and added safeguards, but data sovereignty concerns apply for enterprise users. Individual creators making social content face minimal risk.

Q: Does Seedance 2.0 generate audio automatically?

A: Yes. Native audio-visual generation includes dialogue with lip-sync in 8+ languages, ambient sounds, and effects. This eliminates the need for separate tools like ElevenLabs for most use cases.

Q: What about the 90% success rate claim?

A: Early tester reports support it, but mainly for experienced users of the @ reference system. First-time users with basic text prompts will see lower success rates. The industry average is approximately 20%, so even a 60-70% rate would be a major improvement.

Q: Can Seedance 2.0 replace a video production team?

A: Not completely. It handles product demos, social content, and promotional clips well, and can take over an estimated 60-70% of production work. You still need human direction for complex dialogue, precise lighting, and editorial judgment. Think “assistant director,” not “replacement director.”

📚 Related Reading

- Kling AI Complete Guide 2026: Video Length, Credits, Pricing, Everything

- Runway Gen-4 Review 2025: Hollywood’s $3B AI Video Generator

- Google Flow VEO 3.1 Review 2025: Is It the Sora Killer?

- Kling AI Pricing 2026: Complete Credit Cost Breakdown

- Kling AI Video Length Limits 2026: Free vs Paid Maximum Duration

- Kling AI Review 2025: The Content Creator’s Guide

- ElevenLabs Review 2025: I Cloned My Voice in 5 Minutes

- DaVinci Resolve 20 Review: Free AI Video Editing 2025

- The Complete AI Tools Guide 2025

Last Updated: February 14, 2026 | Seedance Version: 2.0 | Next Review Update: March 14, 2026