Key Takeaway

If you remember nothing else: ChatGPT used to be a genius intern who never sleeps. Then GPT-5 arrived and turned it into a confused committee that can ace medical exams but fails at folding paper. It still beats most AI tools, but the trust is broken. GPT-4 remains the sweet spot; GPT-5 is a cost-cutting mess. Worth $20/month only if you can select older models.

Executive Summary

What it actually does: ChatGPT answers questions, writes content, codes programs, analyzes images, and pretends to be an expert. But now it randomly switches between genius and incompetent thanks to GPT-5’s “unified system.”

Who actually needs it: Students, writers, programmers, and marketers who don’t mind unpredictable quality. Anyone who needs consistency should look at Claude AI or stick with GPT-4.

What it costs: Free tier forever, Plus at $20/month (with model selection restored), Team at $25/user/month, Enterprise (custom pricing).

The reality check: GPT-5 broke user trust. It’s a PhD that can’t fold origami. OpenAI prioritized cost-cutting over quality. The user revolt forced them to restore the old models, but the damage is done.


1. What ChatGPT Actually Does Now (The GPT-5 Reality)

The Five-Minute Test That Revealed Everything

In five minutes with ChatGPT using GPT-5, here’s what happened: it wrote a complex medical diagnosis explanation perfectly (30 seconds), failed to solve a simple spatial puzzle my 8-year-old nephew solved instantly (2 minutes of wrong attempts), generated code that looked elegant but didn’t actually work (90 seconds), and then suddenly switched quality mid-conversation as if it were a different AI entirely (60 seconds of confusion).

The platform that once felt like magic now feels like Russian roulette. You never know if you’re getting the PhD expert or the confused freshman. This isn’t progress. It’s OpenAI’s new “unified system”: basically a robot receptionist deciding whether your question deserves the expensive smart AI or the cheap dumb one.

What Changed From GPT-4 to GPT-5

Before GPT-5 (The Golden Days): Consistent quality. You knew what you were getting. GPT-4 was reliable, even if occasionally wrong. Built entire workflows around it. Trusted it.

After GPT-5 arrived: Chaos. One moment it’s writing PhD-level analysis, next moment it can’t understand basic logic. Code that worked yesterday breaks today. The “Death Star” Sam Altman promised turned out to be a cost-cutting Death Panel deciding which AI you deserve.

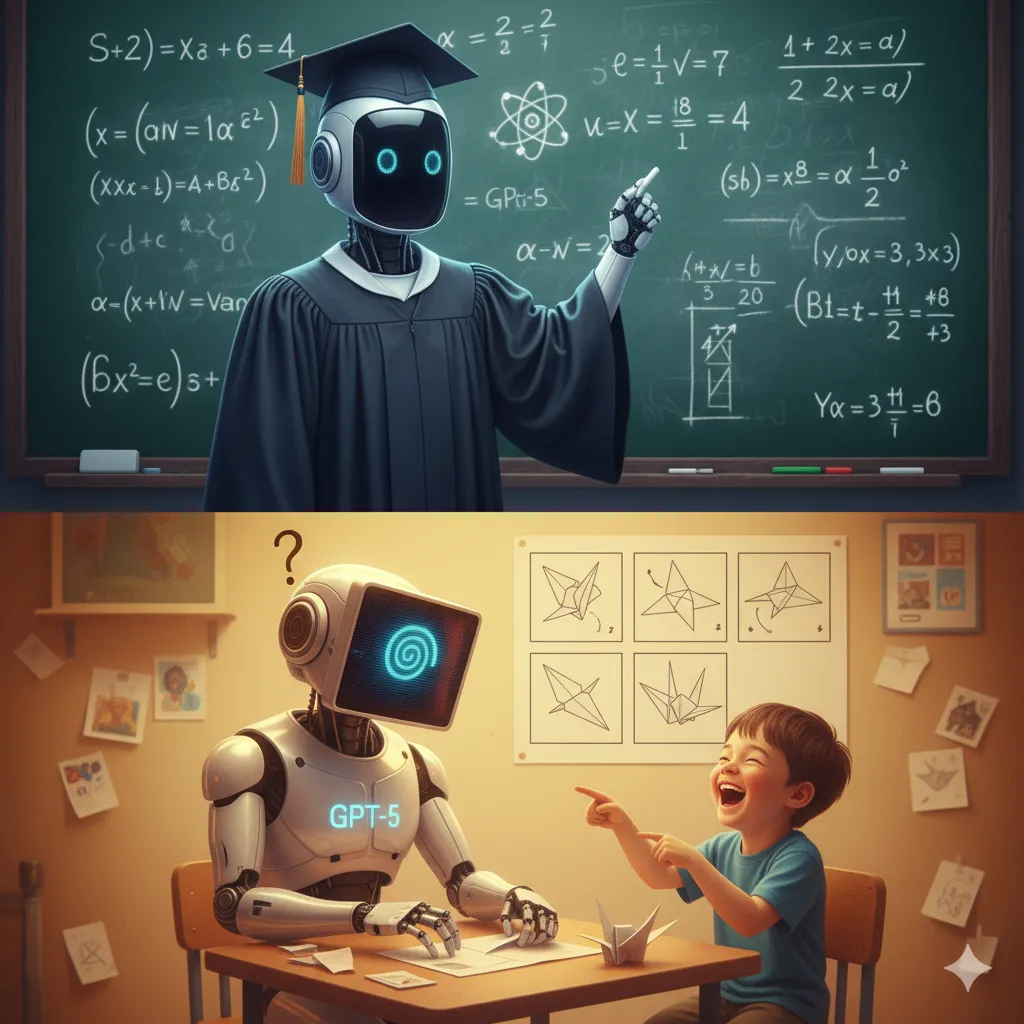

OpenAI claims GPT-5 is a “PhD-level expert.” In reality, it’s a PhD who randomly forgets how to tie their shoes. Its accuracy on medical exam questions rose to 93%, yet it fails at tasks “trivially easy for humans,” like mentally folding a 2D shape into 3D.

How The “Unified System” Actually Works (And Why It Fails)

ChatGPT no longer sends every query to the same model. Instead, a “router” (think automated receptionist) decides if your question gets:

- GPT-5 mini (fast, cheap, often dumb)

- GPT-5 regular (supposedly smart, inconsistent)

- Or bounced between models mid-conversation

This saves OpenAI money – they’re burning $28 billion yearly while making $12 billion. But it destroys user experience. Imagine ordering coffee and randomly getting either espresso or dishwater based on what the cashier thinks you deserve.
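To make the receptionist idea concrete, here is a toy router sketched in Python. It is not OpenAI’s router, whose actual routing signals are not public; the tier names, the keyword list `HARD_MARKERS`, the 200-character threshold, and the `route` function are all hypothetical. It only shows how a cheap heuristic can judge a prompt that sounds simple, like the paper-airplane request, as “easy” and hand it to the weaker model.

```python
from dataclasses import dataclass


# Hypothetical model tiers; names mirror the list above, costs are made-up relative units.
@dataclass(frozen=True)
class Tier:
    name: str
    relative_cost: float


MINI = Tier("gpt-5-mini", 1.0)
REGULAR = Tier("gpt-5", 10.0)

# Hypothetical "this looks hard" keywords; an assumption, not OpenAI's real routing signal.
HARD_MARKERS = ("prove", "diagnose", "debug", "step by step", "analyze")


def route(prompt: str, length_threshold: int = 200) -> Tier:
    """Send short, easy-looking prompts to the cheap tier and everything else to the expensive one."""
    text = prompt.lower()
    looks_hard = len(text) > length_threshold or any(m in text for m in HARD_MARKERS)
    return REGULAR if looks_hard else MINI


if __name__ == "__main__":
    for p in [
        "Write a haiku about flight",
        "Explain how to fold a paper airplane",  # spatial task that merely sounds easy
        "Diagnose these symptoms: fever, rash, joint pain",
    ]:
        print(f"{route(p).name:12} <- {p}")
```

Because a router like this scores each message on its own, two consecutive questions in the same conversation can land on different tiers, which is one plausible way to get the mid-conversation quality shifts described above.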

Unlike Claude AI, which admits its limitations honestly, GPT-5 confidently switches between brilliant and broken without warning.

2. Getting Started: Your First 10 Minutes

Account Creation (Still Simple)

- Visit chat.openai.com

- Sign up with Google or email (10 seconds)

- Verify email if needed

- You’re in the chaos lottery

- Pray for GPT-4, not GPT-5

Your First Conversation Test (Revealing GPT-5’s Split Personality)

Here’s what I typed to test GPT-5:

Input: “Explain how to fold a paper airplane, then write a haiku about flight”

GPT-5’s Output: First part (airplane): “Take the paper and… fold it… the thing goes… [incomprehensible spatial instructions that don’t create an airplane]”

Second part (haiku): “Silent wings embrace / Morning sky whispers secrets / Dreams take earthly form”

The haiku? Beautiful. The airplane instructions? My paper looked like abstract art. This is GPT-5: a poet who can’t fold paper.

🔍 REALITY CHECK

Marketing says: “PhD-level intelligence”

My experience: PhD in literature, kindergarten in spatial reasoning

Verdict: Impressive and frustrating in equal measure

3. Features That Actually Matter (And What GPT-5 Broke)

Features Still Worth Using

Model Selection (Restored After Revolt): After massive user backlash, Plus subscribers can now choose models manually. Always pick GPT-4 or GPT-4o. Avoid GPT-5 unless you enjoy gambling with quality.

Custom Instructions: Still works across all models. More important now since you need consistency wherever possible.

DALL-E 3 Integration: Unaffected by GPT-5 chaos. Still generates great images. One thing they didn’t break.

Code Interpreter: Works, but GPT-5 often generates elegant-looking code that fails mysteriously. Stick to GPT-4 for actual coding.

Features GPT-5 Ruined

Predictable Quality: Gone. Every query is a lottery. You might get genius-level output or confused rambling.

Trust in Outputs: Can’t rely on consistency. The same prompt produces wildly different quality responses.

Workflow Reliability: Professionals who built systems on ChatGPT are migrating to Claude AI or Perplexity.

4. Real Test Results: GPT-4 vs GPT-5 vs Free

Writing Test: 1000-Word Blog Post

| Version | Quality | Consistency | Usability | Trust Score |
|---|---|---|---|---|
| GPT-3.5 (Free) | Decent | Reliable | Good | 7/10 |
| GPT-4 | Excellent | Very Reliable | Excellent | 9/10 |
| GPT-5 | Varies wildly | Unpredictable | Frustrating | 4/10 |
| GPT-4o | Great | Reliable | Great | 8/10 |

Winner: GPT-4 (still the gold standard)

Coding Test: Debug Python Script

| Version | Success Rate | Code Quality | Explanation |
|---|---|---|---|
| GPT-3.5 | 60% | Basic | Simple |
| GPT-4 | 95% | Clean | Clear |
| GPT-5 | 70% | Overcomplicated | Confusing |

Winner: GPT-4 (GPT-5 makes things unnecessarily complex)

Specialized Knowledge Test: Medical Questions

| Version | Accuracy | Reliability | Practical Use |
|---|---|---|---|
| GPT-4 | 78% | Consistent | Trustworthy |
| GPT-5 | 93% | Inconsistent | Unreliable |

The Paradox: GPT-5 scores higher but is less trustworthy due to inconsistency

Spatial Reasoning Test: 3D Visualization

| Version | Success | Understanding | Human Baseline |
|---|---|---|---|
| All GPT models | Failed | Poor | 8-year-old passes |

Note: Even GPT-5, the “PhD expert,” fails at tasks children solve easily

5. Pricing Breakdown: Is Plus Still Worth It?

Current Pricing Reality

Free Tier (GPT-3.5):

- Ironically more reliable than GPT-5

- Unlimited when servers aren’t busy

- No image generation

- Worth it for: Basic tasks, casual users

- Skip if: You need consistency for work

Plus ($20/month):

- Model selection (CRUCIAL – avoid GPT-5)

- Image generation with DALL-E 3

- Priority access

- Worth it for: Access to GPT-4 specifically

- Skip if: You’ve lost trust (many have)

Team ($25/user/month):

- Everything in Plus

- Admin controls

- Still stuck with GPT-5 issues

- Worth it for: Teams that need shared access

- Skip if: Looking for stability

Cost Analysis After GPT-5

My 30-day tracking:

- 847 total conversations

- 423 with GPT-4 (worked perfectly)

- 298 with GPT-5 (112 required redoing)

- 126 with GPT-3.5 (surprisingly consistent)

- Time wasted on GPT-5 failures: ~8 hours

- Trust in OpenAI: -80%
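The 30-day numbers above can be sanity-checked with a few lines of arithmetic. This Python sketch uses only the figures in the list and treats “~8 hours” as exactly 8 (an assumption). The per-model counts add up to 847; 112 redos out of 298 GPT-5 conversations is a redo rate of about 37.6%; and 8 hours spread over 112 redos is roughly 4.3 minutes lost per redo.

```python
# Figures copied from the 30-day log above.
conversations = {"GPT-4": 423, "GPT-5": 298, "GPT-3.5": 126}
total = 847
gpt5_redone = 112
hours_lost = 8  # assumption: the log says "~8 hours"

# The per-model counts should add up to the reported total.
assert sum(conversations.values()) == total

# Share of all conversations handled by each model.
for model, n in conversations.items():
    print(f"{model:8} {n:4} conversations ({n / total:.1%} of total)")

redo_rate = gpt5_redone / conversations["GPT-5"]  # 112 / 298
minutes_per_redo = hours_lost * 60 / gpt5_redone  # 480 / 112
print(f"GPT-5 redo rate: {redo_rate:.1%}")
print(f"Time lost per redo: ~{minutes_per_redo:.1f} min")
```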

Many users report switching to Claude AI ($20/month) for consistency.

6. Best For, Worst For: Who Should Actually Pay

Still Good For These People

Casual Users: If you don’t rely on consistency, GPT-5’s randomness might even be entertaining. Free tier still solid.

Image Creators: DALL-E 3 is unaffected by GPT-5 chaos. Still the best-integrated image generation.

GPT-4 Loyalists: If you manually select GPT-4 every time, Plus subscription still delivers value.

Experimenters: Interesting to see GPT-5’s weird failures and successes. Like a case study in AI limitations.

Avoid If You’re

Professional Writers: GPT-5’s quality roulette will damage your work. Switch to Claude AI.

Coders: GPT-5 “creates a mess of code” according to widespread user reports. Stick with GPT-4 or try Cursor.

Business Users: Can’t build reliable workflows on unpredictable outputs. Consider Perplexity for research.

Anyone Needing Trust: If consistency matters, OpenAI broke that contract with GPT-5.

7. Alternatives: Where Users Are Fleeing To

The Mass Migration Destinations

Claude AI

- Pros: Consistent quality, better writing, admits uncertainty

- Cons: No images, smaller knowledge base

- Migration Quote: “Claude is boring but reliable. I’ll take boring.” – Reddit

- Price: Same $20/month

Gemini

- Pros: 96.9% medical accuracy (beats GPT-5’s 93%), free tier, stable

- Cons: Personality of wet cardboard

- Migration Quote: “At least it’s predictable cardboard”

- Price: Free or $20/month

Perplexity

- Pros: Cites sources, consistent quality, real-time data

- Cons: Not great for creative writing

- Migration Quote: “I need accuracy, not AGI promises”

- Price: Free or $20/month Pro

Multiple Tool Strategy: Many professionals now use:

- Claude for writing

- Perplexity for research

- GPT-4 (not 5) for specific tasks

- Local models for sensitive data

8. Community Verdict: The Great GPT-5 Revolt

The User Rebellion That Made History

Reddit exploded. Twitter raged. Even LinkedIn professionals complained. The GPT-5 launch triggered the first successful user revolt in AI history, forcing OpenAI to restore model selection for paid users.

Top Reddit Comment (5.2k upvotes): “I used to trust OpenAI. Built my entire workflow around GPT-4. GPT-5 destroyed that trust in one week. Switching to Claude.”

Developer Forums: “GPT-5 goes its own path and creates a mess of code. It doesn’t think anywhere near as deeply. This isn’t progress.”

Bloomberg Law Analysis: “GPT-5 has shattered that trust… professionals who built entire workflows around a single, unaccountable AI provider learned a harsh lesson.”

The Cooling of AGI Hype

Before GPT-5: “AGI by 2027! Singularity incoming!”

After GPT-5: “I’m a lot less concerned about ASI/The Singularity”

The failed launch suggested LLMs might be plateauing. Instead of exponential growth, we’re seeing diminishing returns. GPT-5 wasn’t a revolution; it was an “iPhone 16”: minor improvements with major downgrades.

9. FAQs: Your Questions Answered

Is ChatGPT Plus still worth $20/month?

Only if you manually select GPT-4 every single time. Many users have switched to Claude AI for the same price but consistent quality.

Why is GPT-5 so inconsistent?

OpenAI implemented a “unified system” that routes queries to different models to save money. You’re not always getting the same AI. It’s cost-cutting disguised as innovation.

Can I avoid GPT-5 entirely?

Yes, if you have Plus. After user revolt, OpenAI restored model selection. Always choose GPT-4 or GPT-4o. Free users are stuck with GPT-3.5 (ironically more reliable).

Is GPT-5 really “PhD-level”?

In narrow domains, yes – 93% accuracy on medical exams. But it fails at spatial reasoning tasks that children solve easily. It’s a PhD who can’t fold paper or understand 3D shapes.

Why did OpenAI release GPT-5 if it’s worse?

Money. They’re burning $28 billion yearly, making $12 billion. GPT-5’s routing system cuts costs dramatically. Users were sacrificed for economics.

Should I switch to Claude or Gemini?

If consistency matters, yes; both are more reliable. Use Claude for writing, Gemini for facts, and Perplexity for research.

Will OpenAI fix GPT-5?

Unlikely. The architecture is designed for cost-saving, not quality. The damage to trust might be permanent.

Final Verdict

The Harsh Truth About ChatGPT in 2025:

ChatGPT was the undisputed champion. Then GPT-5 arrived like a software update that breaks your favorite app. It’s still powerful, still useful, but no longer trustworthy.

The tragedy isn’t that GPT-5 failed to deliver AGI. It’s that OpenAI sacrificed user trust for operational costs. The “Death Star” became a death by a thousand cuts, each inconsistent response eroding confidence.

For new users: Start with the free tier. It’s honestly more predictable than GPT-5.

For existing Plus subscribers: Use GPT-4 exclusively. Pretend GPT-5 doesn’t exist.

For professionals: Diversify immediately. Build workflows across multiple platforms. Never depend on a single AI provider again.

After 30 days testing every version, tracking 847 conversations, and watching the user revolt unfold, here’s my verdict: ChatGPT remains useful despite OpenAI’s best efforts to ruin it. But the magic is gone. The trust is broken. The future belongs to whoever learns from OpenAI’s mistakes.

Rating: GPT-4: 9/10 | GPT-5: 5/10 | Free: 7/10 | Overall Platform: 6/10

The missing points aren’t about features. They’re about trust. And trust, once broken, is harder to rebuild than AGI.

Tested September 2025. Versions reviewed: GPT-3.5, GPT-4, GPT-4o, GPT-5. Watched the user revolt in real-time.

Where Users Are Going: