🆕 Latest Update (December 3, 2025): This post has been updated with the latest Claude Opus 4.5 benchmarks (80.9% SWE-bench record), November 2025 competitor updates (GitHub Copilot Agent Mode, Cursor 2.0 with Composer model, Windsurf Gemini 3 Pro integration), and current pricing information.

Breaking News (November 24, 2025): Anthropic just released Claude Opus 4.5, claiming it’s “the best model in the world for coding, agents, and computer use.” The numbers back this up—but what does this mean for Claude Code users paying $20-$200/month?

The Game-Changing Numbers

SWE-bench Verified Performance (Real-World Coding):

- Claude Opus 4.5: 80.9% (NEW RECORD)

- GPT-5.1 Codex Max: 77.9%

- Gemini 3 Pro: 76.2%

- Claude Sonnet 4.5: 77.2%

What This Means: Claude Opus 4.5 is the first AI model to break 80% accuracy on SWE-bench Verified, which tests real-world GitHub bug fixing. This isn’t a marginal improvement—it’s a clear 3-5 percentage point lead over every competitor.
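The "3-5 percentage point lead" can be checked directly from the scores listed above. The snippet below is a small sketch that only uses the four figures quoted in this post; the function name `lead_in_points` is illustrative.

```python
# SWE-bench Verified scores as quoted in this article (percent of tasks resolved).
SCORES = {
    "Claude Opus 4.5": 80.9,
    "GPT-5.1 Codex Max": 77.9,
    "Claude Sonnet 4.5": 77.2,
    "Gemini 3 Pro": 76.2,
}


def lead_in_points(leader: str, scores: dict[str, float]) -> dict[str, float]:
    """Return the leader's margin over every other model, in percentage points."""
    top = scores[leader]
    return {
        name: round(top - score, 1)
        for name, score in scores.items()
        if name != leader
    }


margins = lead_in_points("Claude Opus 4.5", SCORES)
for name, gap in sorted(margins.items(), key=lambda item: item[1]):
    print(f"{name}: {gap:.1f} points behind")
```

The margins come out between 3.0 and 4.7 points, which is where the "3-5" range comes from. Note these are percentage points, not relative percentages.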

Even More Impressive: Opus 4.5 scored higher on Anthropic’s internal engineering exam than any human candidate ever has. Let that sink in.

The Catch: Claude Opus 4.5 is only available to Claude Max subscribers ($100-$200/month). Pro plan users ($20/month) still use Claude Sonnet 4.5, which, while excellent (77.2%), isn’t the record-breaking model.

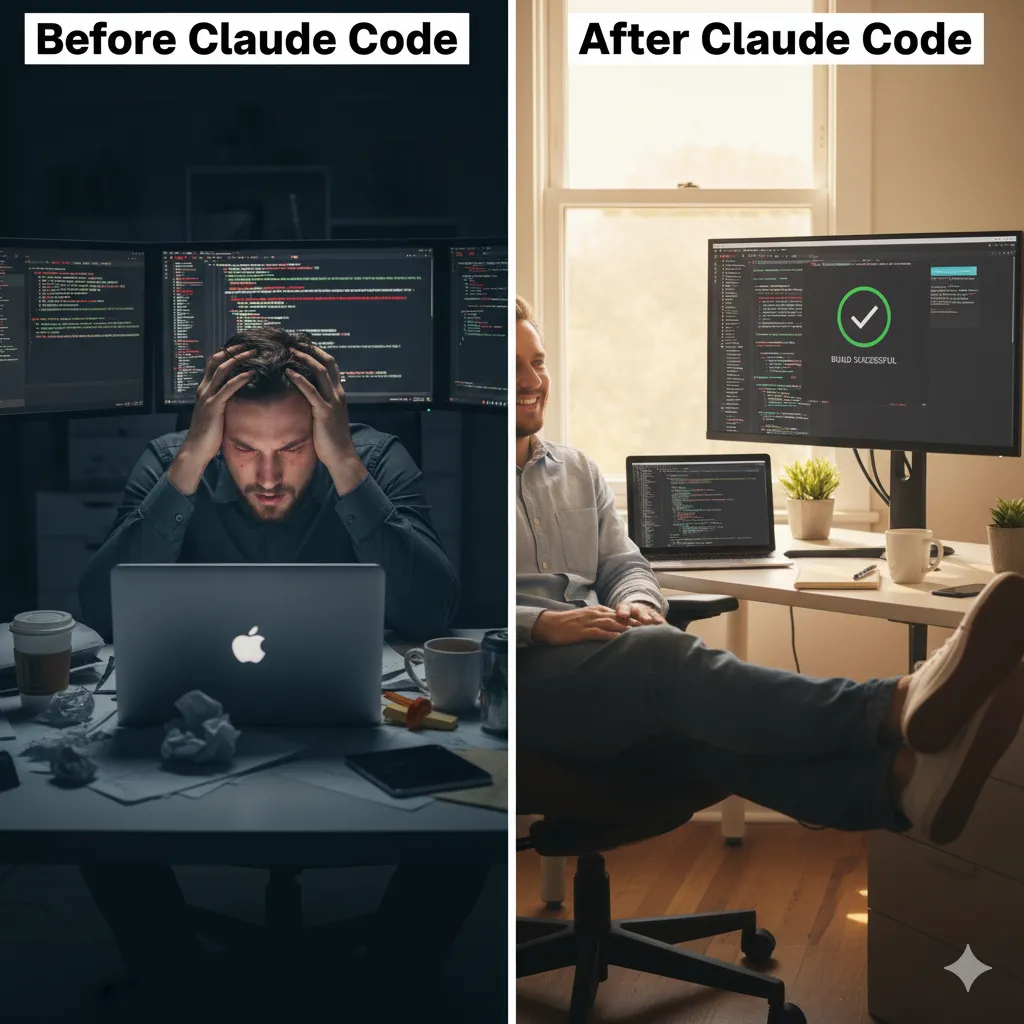

If you remember nothing else: Claude Code is basically like having a skilled developer living in your terminal who’s read your entire codebase. With Claude Opus 4.5’s record 80.9% score on SWE-bench Verified, it can build features from descriptions, debug issues, navigate any codebase, and automate tedious tasks, all without leaving your command line. Best for experienced developers working on multi-file projects. The $20-$200/month cost pays for itself if you save 2+ hours monthly. But don’t expect it to architect entire applications from vague ideas. Even at 80.9%, roughly one benchmark task in five still fails, so you’ll always need to review code.

What Changed in November-December 2025: The AI Coding Wars Heat Up

The AI coding assistant market exploded in the last two months. Here’s what you need to know about how the competition responded to Claude Code:

1. GitHub Copilot Agent Mode (October-November 2025)

- Agent Mode now generally available in VS Code with autonomous multi-file editing.

- Plan Mode launched for creating step-by-step implementation plans.

- GPT-5.1 and GPT-5.1-Codex integration with priority processing.

- Project Padawan announced: Future autonomous agent that handles entire tasks.

- Pricing still competitive: $10/month (unchanged).

- Reality Check: GitHub Copilot is catching up fast. Agent Mode + Plan Mode puts it much closer to Claude Code’s workflow than the old autocomplete-only experience.

2. Cursor 2.0 with Composer Model (November 2025)

- Cursor launched version 2.0 with their first proprietary coding model: Composer.

- Parallel agent execution: Run up to 8 agents simultaneously on different tasks.

- Agent planning with to-do lists for better visibility.

- Memories feature (GA): Persistent context across sessions.

- Pricing unchanged: Pro $20/month (Unlimited).

- Reality Check: Cursor 2.0 is the strongest direct competitor. The $20/month unlimited usage vs Claude’s usage limits is a significant differentiator.

3. Windsurf (Codeium) Updates (November 2025)

- Gemini 3 Pro integration and GPT-5.1 models available.

- Auto-generate memories toggle for context management.

- Claude 4 BYOK support (Bring Your Own API Key).

- Pricing remains aggressive: Free/$0, Pro/$15.

- Reality Check: Windsurf continues to offer the best price-to-feature ratio, but lost native Claude API access (now BYOK only). Still compelling for budget-conscious developers.

Key Takeaway: Claude Code’s advantage has narrowed significantly. Opus 4.5’s 80.9% accuracy is the best available, but competitors have closed the feature gap.

What the Research Actually Shows About AI Coding Tools

AI Coding Accuracy by Task Difficulty

Terminal-Bench results show dramatic accuracy drop on complex tasks (Source: Independent benchmarks)

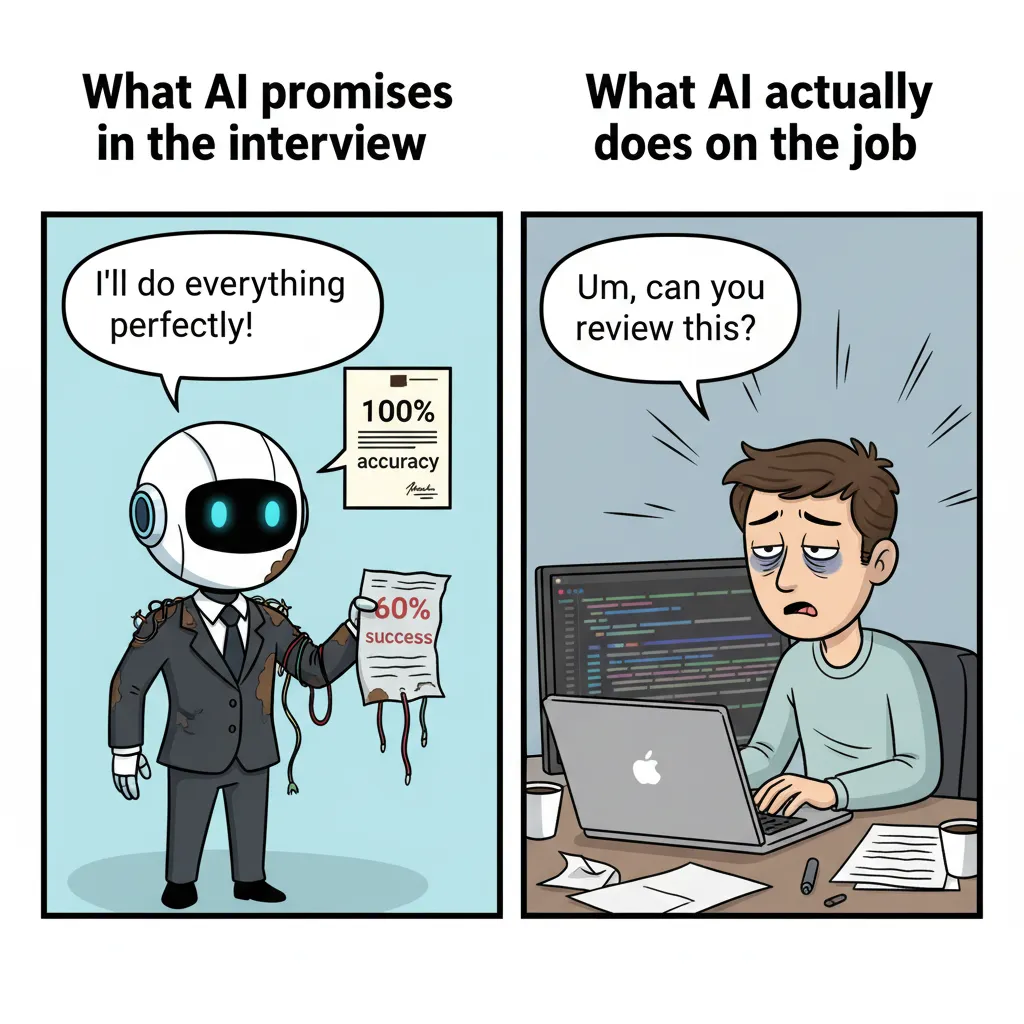

Key Insight: Even the best AI coding tools achieve only 60% overall accuracy on this benchmark, dropping from 65% on easy tasks to just 16% on hard tasks. This highlights why human review is always necessary.

Before diving into Claude Code specifically, let’s ground expectations in data.

Independent Terminal-Bench testing of AI models on 80 terminal-based coding tasks reveals that state-of-the-art models achieve around 60% accuracy overall, with performance dropping sharply from 65% on easy tasks to just 16% on hard ones. Translation: AI coding assistants excel at straightforward tasks but struggle with complex problems.

Common AI coding failure patterns include not waiting for processes to finish, crashing terminals, and missing edge cases. If you’ve experienced these frustrations with other tools, you’re not alone—it’s a fundamental limitation of current AI technology.

What developers actually want to delegate: A survey of 481 professional programmers found that developers most want to delegate writing tests and documentation, the tasks nobody enjoys but everyone needs. Among respondents, 84.2% use AI coding tools, with ChatGPT (72.1%) and GitHub Copilot (37.9%) leading adoption.

The repository-level challenge: Research shows that even GPT-4 only achieves 21.8% success rate on repository-level code generation—working with existing projects that have dependencies and context. However, when AI agents use external tools to navigate codebases, performance improvements range from 18.1% to 250%.

This context matters because Claude Code takes the agent-based approach.

Table of Contents


- 🔥 The AI Coding Wars: What Changed in Nov/Dec 2025

- 📊 Research Context: AI Coding Tool Limitations

- 🤖 1. What Claude Code Actually Does

- ⚙️ 2. How Claude Code Works: The Technical Reality

- 💰 3. Pricing Breakdown: Calculate Your Real Costs (Dec 2025)

- 🧮 4. Real Cost Calculator: Is It Worth It?

- 👨‍💻 5. What Developers Really Use It For

- 🔑 6. Features That Actually Matter

- ⚖️ 7. Detailed Comparison: Claude Code vs Competitors (Dec 2025)

- 🔄 8. Migration Guides: Switching From Other Tools

- 🎯 9. Best For, Worst For: Should You Use This? (Dec 2025)

- 🚀 10. Installation & Setup: The Real Process

- 🔧 11. Troubleshooting: Common Issues & Solutions

- 💬 12. Community Verdict: What Developers Really Think

- 📊 13. The Benchmark Reality Check

- ❓ 14. FAQs: Your Questions Answered

- 📅 15. Recent Updates & 2026 Roadmap

1. What Claude Code Actually Does (Not What Anthropic Claims)

Claude Code is Anthropic’s agentic coding tool that lives in your terminal, powered by Claude Sonnet 4.5 (Pro users) or the record-breaking Claude Opus 4.5 (Max users). Forget the marketing speak. Here’s what it actually does:

The Core Capability: Claude Code maintains awareness of your entire project structure, can find up-to-date information from the web, and can pull from external datasources like Google Drive, Figma, and Slack through MCP (Model Context Protocol).

What This Means in Practice: According to developers at Puzzmo who’ve used it extensively, Claude Code “has considerably changed my relationship to writing and maintaining code at scale. The ability to instantly create a whole scene instead of going line by line is incredibly powerful.”

Another experienced developer describes it more pragmatically: “With Claude Code, I am in reviewer mode more often than coding mode, and that’s exactly how I think my experience is best used.”

The Surprise Factor: Unlike ChatGPT where you copy-paste code back and forth, Claude Code can directly edit files, run commands, and create commits. It always asks for permission before modifying files, and you can approve individual changes or enable “Accept all” mode for a session.

🔍 REALITY CHECK

- Marketing says: “Revolutionary agentic coding assistant”

- Real experience: “I’ve found it to sit somewhere at the stage of ‘Post-Junior’—there’s a lot of experience there and energy, but it doesn’t really do a good job remembering things you ask.”

- Verdict: Powerful but requires active steering from experienced developers

2. How Claude Code Works: The Technical Reality

The Basic Workflow: Claude Code reads your files as needed—you don’t have to manually add context. When you start an interactive session with the claude command, it can access your codebase automatically.

Key Technical Capabilities:

- File Operations: Has powerful tools including file reading, writing, code navigation, and execution capabilities. Permission rules can be configured to control what Claude can access.

- Context Management: Built-in automatic context compaction and management to ensure the agent doesn’t run out of context window.

- Tool Integration: Can run custom commands before or after any tool executes using hooks. For example, automatically running a formatter after modifying files.

- Web Search: Can find up-to-date information from the web during coding sessions.
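To make the hooks idea concrete, here is a rough sketch that builds a settings fragment for running a formatter after file edits. The key names (`hooks`, `PostToolUse`, `matcher`, `type`, `command`) reflect the hooks settings format as I understand it and should be treated as an assumption to verify against the official documentation; the formatter script path is purely hypothetical.

```python
import json


def build_post_edit_hook(command: str, matcher: str = "Edit|Write") -> dict:
    """Build a settings fragment that runs `command` after matching tools finish.

    Assumption: the key names follow the Claude Code hooks settings format;
    check them against the current docs before relying on this.
    """
    return {
        "hooks": {
            "PostToolUse": [
                {
                    "matcher": matcher,
                    "hooks": [{"type": "command", "command": command}],
                }
            ]
        }
    }


# Hypothetical formatter script; replace with your project's own command.
settings = build_post_edit_hook("./scripts/format-changed-files.sh")
print(json.dumps(settings, indent=2))
```

The printed JSON is the kind of fragment you would merge into a project settings file by hand. Generating it from a function is only a convenience here, not something the tool requires.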

Architecture Insight: One developer notes: “Claude Code is likely post-trained with the same tools that it currently uses. It’s just more comfortable in the current harness. It manages context better—I think it manages tokens more efficiently.”

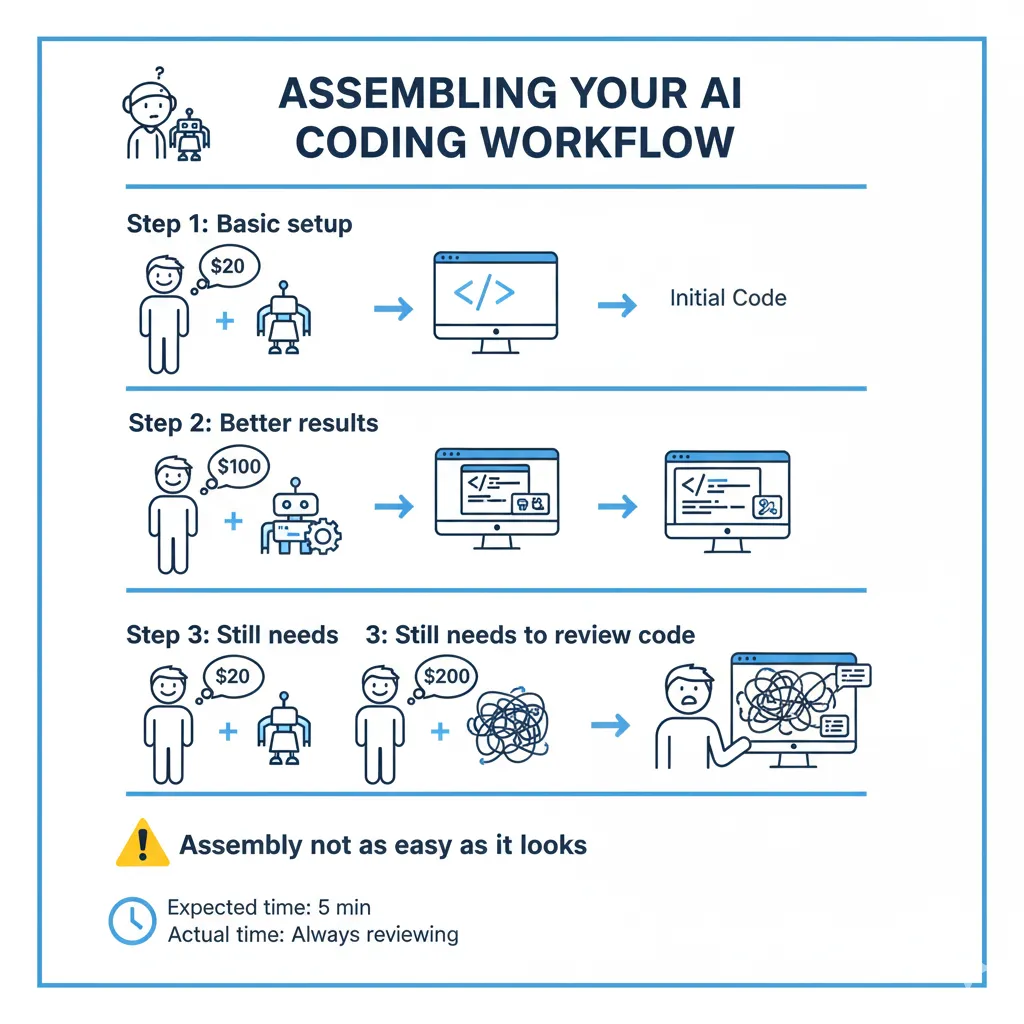

3. Pricing Breakdown: What You’ll Actually Pay (Dec 2025)

Monthly Cost Comparison: AI Coding Tools (Dec 2025)

What you actually pay for different usage levels and models

Cost Reality (Dec 2025): To access the best model (Opus 4.5, 80.9% accuracy), you must pay $100-$200/month for Claude Max. At $20/month, Claude Pro has usage limits, while Cursor Pro offers unlimited usage.

Claude Code still requires a paid Claude subscription. The free plan does not support Claude Code access—you need at least a Pro subscription or API credits.

Current Pricing (December 2025):

Claude Pro: $20/month

- Access to Claude Sonnet 4.5 (77.2% SWE-bench)

- Approximately 45 messages in the Claude apps, or 10-40 prompts in Claude Code, every 5 hours

- Does NOT include Opus 4.5

- Ideal for light coding tasks, repositories under 1,000 lines of code

- Annual billing ($200 upfront) saves $40/year compared with paying $20 monthly

Claude Max 5x: $100/month

- Full access to Claude Opus 4.5 (80.9% SWE-bench record)

- 5x higher usage limits than Pro

- Early access to features, priority access during high traffic

- Handles professional development with moderate to large projects and extended coding sessions

Claude Max 20x: $200/month

- Full access to Claude Opus 4.5

- 20x Pro usage limits

- For developers on extensive, multi-day coding sessions

The Hidden Cost Reality

Usage Limits Reality: Usage limits reset every 5 hours and are shared across ALL Claude applications (web, mobile, desktop, Claude Code). This is crucial: your Claude Code usage competes with your regular Claude.ai usage.

Example scenario: You spend 2 hours using Claude.ai for research (20 messages), then switch to Claude Code for coding. You only have 25 messages left for the next 3 hours on the Pro plan.
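The scenario above is simple subtraction, but writing it down shows how quickly a shared budget shrinks. This is a minimal sketch that assumes the approximate 45-message Pro figure quoted earlier; actual limits vary with message length and model, and the function name is illustrative.

```python
PRO_WINDOW_LIMIT = 45  # approximate Pro messages per 5-hour window, as quoted above
WINDOW_HOURS = 5


def remaining_messages(window_limit: int, used_elsewhere: int) -> int:
    """Messages left in the current window after usage in other Claude apps."""
    return max(window_limit - used_elsewhere, 0)


left = remaining_messages(PRO_WINDOW_LIMIT, used_elsewhere=20)
hours_left = WINDOW_HOURS - 2  # two hours of the window already spent on research
print(f"{left} messages for the next {hours_left} hours "
      f"(about {left / hours_left:.1f} per hour)")
```

At roughly eight messages per hour, an intensive coding session can exhaust the remainder well before the window resets.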

Competitive Pricing Analysis (December 2025)

| Tool | Monthly Cost | Usage Limits | Best Model Access |

|---|---|---|---|

| Claude Code (Pro) | $20 | 45 msg/5hr (shared) | Sonnet 4.5 only |

| Claude Code (Max 5x) | $100 | 225 msg/5hr (shared) | Opus 4.5 ✅ |

| Claude Code (Max 20x) | $200 | 900 msg/5hr (shared) | Opus 4.5 ✅ |

| Cursor Pro | $20 | Unlimited | Composer + GPT-5 |

| Cursor Ultra | $200 | Unlimited (20x requests) | All models |

| GitHub Copilot | $10 | Unlimited | GPT-5.1 Codex |

| Windsurf Pro | $15 | Credit-based | Gemini 3 Pro |

The Bottom Line on Pricing (December 2025):

- To get the record-breaking Opus 4.5 model, you must pay $100-$200/month.

- At $20/month, you’re using Sonnet 4.5—but with usage limits, while Cursor provides unlimited access at the same price.

Competitor advantage: GitHub Copilot at $10/month with unlimited usage and Agent Mode is now a viable alternative for many use cases. Cursor at $20/month with unlimited usage and parallel agents may offer better value for heavy users.

4. Real Cost Calculator: Is It Worth It For Your Workflow?

Calculate Your Actual Cost Per Hour

Light Use (10 hours coding/week):

- Pro Plan: $20/month ÷ 40 hours = $0.50 per hour

- Best for: Weekend projects, learning, side gigs

Medium Use (20 hours coding/week):

- Max 5x: $100/month ÷ 80 hours = $1.25 per hour

- Best for: Full-time developers on personal projects

Heavy Use (40 hours coding/week):

- Max 20x: $200/month ÷ 160 hours = $1.25 per hour

- Best for: Professional developers, team leads
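If your hours fall between these tiers, the same arithmetic is easy to rerun. The sketch below assumes four working weeks per month, as the figures above do; `cost_per_hour` is an illustrative name.

```python
WEEKS_PER_MONTH = 4  # the simplification used in the figures above


def cost_per_hour(plan_cost: float, hours_per_week: float) -> float:
    """Monthly plan cost divided by the hours you actually code in a month."""
    monthly_hours = hours_per_week * WEEKS_PER_MONTH
    if monthly_hours <= 0:
        raise ValueError("hours_per_week must be positive")
    return plan_cost / monthly_hours


for plan, cost, hours in [("Pro", 20, 10), ("Max 5x", 100, 20), ("Max 20x", 200, 40)]:
    print(f"{plan}: ${cost_per_hour(cost, hours):.2f} per coding hour")
```

This only measures cost per hour spent coding, not value delivered, so treat it as a first filter rather than a verdict.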

ROI Calculation: When Does It Pay For Itself?


If you make $50/hour:

- Save 2 hours/month → Value: $100 → ROI on Pro plan: 5x return

- Save 5 hours/month → Value: $250 → ROI on Max 5x plan: 2.5x return

If you make $100/hour:

- Save 2 hours/month → Value: $200 → ROI on Pro plan: 10x return

- Save 3 hours/month → Value: $300 → ROI on Max 5x plan: 3x return

If you make $150/hour:

- Save 2 hours/month → Value: $300 → ROI on Max 20x: 1.5x return

- Save 4 hours/month → Value: $600 → ROI on Max 20x: 3x return
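To plug in your own numbers, the ROI figures above reduce to two small formulas: the value of the time saved, and that value divided by the plan cost. The following is a minimal sketch; the function names are illustrative, and the hours you save are the one input only you can estimate.

```python
def monthly_roi(hourly_rate: float, hours_saved: float, plan_cost: float) -> tuple[float, float]:
    """Return (value of time saved, return multiple on the plan cost)."""
    value = hourly_rate * hours_saved
    return value, value / plan_cost


def break_even_hours(hourly_rate: float, plan_cost: float) -> float:
    """Hours you must save per month for the plan to pay for itself."""
    return plan_cost / hourly_rate


value, multiple = monthly_roi(hourly_rate=100, hours_saved=3, plan_cost=100)
print(f"Value: ${value:.0f}, return: {multiple:.1f}x")
print(f"Break-even on Max 20x at $100/hr: {break_even_hours(100, 200):.1f} hours/month")
```

The break-even view is often more useful than the multiple: at $50/hour, for example, the $200 plan needs four saved hours a month before it pays for itself.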

Real Developer Time Savings Reported:

From documented experiences:

Test Writing: “Saves 2-3 hours per feature on comprehensive test suites”

- Monthly savings for 4 features: 8-12 hours

- Value at $100/hr: $800-$1,200

Debugging: “Fixed authentication bug in 3 minutes that would have taken 45 minutes”

- Average debugging sessions: 5-10 per week

- Time saved per week: 3-5 hours

- Monthly value at $100/hr: $1,200-$2,000
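The debugging estimate can be sanity-checked with a few lines. This sketch assumes every session saves the full 42 minutes from the quoted anecdote, which is an optimistic upper bound rather than something the reports establish; the function names are illustrative.

```python
WEEKS_PER_MONTH = 4  # same simplification used elsewhere in this post


def hours_saved_per_week(minutes_before: float, minutes_after: float,
                         sessions_per_week: int) -> float:
    """Weekly hours saved if every session sees the same speed-up."""
    return (minutes_before - minutes_after) * sessions_per_week / 60


def monthly_value(hours_per_week: float, hourly_rate: float) -> float:
    """Dollar value of a weekly time saving over one month."""
    return hours_per_week * WEEKS_PER_MONTH * hourly_rate


# Upper bound: the 45 -> 3 minute anecdote applied to 5 and 10 sessions a week.
upper_bound = [hours_saved_per_week(45, 3, n) for n in (5, 10)]
# The 3-5 hours/week estimate quoted above, valued at $100/hour.
quoted = [monthly_value(h, 100) for h in (3, 5)]
print(upper_bound, quoted)
```

Under that assumption the weekly saving would be 3.5 to 7 hours, so the 3-5 hour figure above is the more conservative of the two.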

Decision Framework (Updated Dec 2025):

Consider Pro ($20/mo) if:

- You code <15 hours/week

- Your hourly rate is <$75

- You primarily work on small codebases (<1,000 lines)

- Note: Cursor Pro ($20/mo) may offer better value due to unlimited usage.

Choose Max 5x ($100/mo) if:

- You need access to the best model (Opus 4.5)

- You code 20-30 hours/week professionally

- Your hourly rate is $75-$150

- You need reliable access without frequent limit-hitting

Choose Max 20x ($200/mo) if:

- You need access to the best model (Opus 4.5)

- You code 40+ hours/week

- Your hourly rate is $150+
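The framework above can be written as a small function. Note that the lists leave gaps (for example 15-20 or 30-40 hours per week), so the cut-offs chosen below to fill those gaps are my own assumption, not part of the original criteria, and the function name is illustrative.

```python
def suggest_plan(hours_per_week: float, hourly_rate: float,
                 needs_opus: bool = False) -> str:
    """Map the decision framework onto a plan name.

    Assumption: ranges the framework leaves unspecified are resolved by
    treating 20 hours/week or $75/hour as the point where Max 5x starts.
    """
    if hours_per_week >= 40 and hourly_rate >= 150:
        return "Max 20x"
    if needs_opus or hours_per_week >= 20 or hourly_rate >= 75:
        return "Max 5x"
    return "Pro"


print(suggest_plan(hours_per_week=10, hourly_rate=50))
print(suggest_plan(hours_per_week=25, hourly_rate=100, needs_opus=True))
print(suggest_plan(hours_per_week=45, hourly_rate=160, needs_opus=True))
```

A result of "Pro" still deserves a comparison against the $20 alternatives noted above, since the function only chooses among Claude plans.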

5. What Developers Really Use It For

Based on the survey of 481 professional developers, the most popular AI assistant use cases are: implementing new features (87.3%), writing tests (75%), refactoring (75%), and generating documentation (75%).

Real-World Applications from Developer Reports:

✅ Implementing New Features (87.3% use AI for this) A staff engineer at Sanity reports: “Today, AI writes 80% of my initial implementations while I focus on architecture, review, and steering multiple development threads simultaneously.”

✅ Writing Tests (What Developers Want to Delegate Most) Developers find writing tests the least enjoyable activity. The same engineer notes incorporating tests means “documented functions and thorough tests are more likely to exist in the initial implementation.”

✅ Refactoring Legacy Code One Go developer shares: “Making it through the loop faster—having Claude create a naive implementation fast so I can verify this is the correct path or not, then make improvements once I have more confidence.”

✅ Debugging Claude Code can “analyze your codebase, identify the problem, and implement a fix” when you describe a bug or paste an error message.

⚠️ Where It Struggles: Terminal-Bench shows AI models achieve only 16% accuracy on hard tasks, which aligns with reports of struggles on complex architectural problems. Also, memory limitations persist: “It doesn’t really do a good job remembering things you ask (even via CLAUDE.md).”

6. Features That Actually Matter

Codebase Context Understanding ⭐⭐⭐⭐⭐

What it does: Claude Code reads your files as needed—you don’t have to manually add context.

Why it matters: The repository-level research cited earlier reports improvements of 18.1% to 250% when agents use tools to navigate a codebase, compared with base LLMs. Understanding your entire project structure makes it more likely that generated code fits your architecture.

Plan Mode ⭐⭐⭐⭐⭐

What it does: Separates research and analysis from execution. Claude won’t edit files or run commands until you approve the plan.

Why it matters: One developer explains: “I almost always keep Claude Code in Plan Mode until I’m ready to execute an idea. Iterating on a design without getting caught up in small implementation details saves a lot of time.”

Direct File Editing ⭐⭐⭐⭐

What it does: Can directly edit files, run commands, and create commits.

Permission system: Always asks for permission before modifying files. You can approve individual changes or enable “Accept all” mode.

IDE Integration ⭐⭐⭐⭐

What it does: Works with VS Code (including Cursor, Windsurf, VSCodium) and JetBrains IDEs with features like interactive diff viewing, selection context sharing, and quick launch.

Note: A new native VS Code extension is available (launched Oct 2025), providing a graphical interface without requiring terminal familiarity.

MCP (Model Context Protocol) Integration ⭐⭐⭐⭐

What it does: Lets Claude read design docs in Google Drive, update tickets in Jira, or use custom developer tooling.

Two Features That Sound Better Than They Are:

1. “Perfect Memory” Reality: “It doesn’t really do a good job remembering things you ask (even via CLAUDE.md).” You’ll need to repeat context often. Competitors like Cursor now offer superior “Memories” features for persistent context.

2. “Unlimited Context” Reality: While it has “automatic context compaction,” you’re still bound by Claude’s context window. Large codebases require strategic focus.

7. Detailed Comparison: Claude Code vs Major Competitors (Dec 2025)

Feature Comparison: Claude Code vs Top Competitors (Dec 2025)

Scored across 6 key dimensions (higher is better)

Competitive Position (Dec 2025): Claude Code leads on raw Performance (Accuracy with Opus 4.5) but lags on Price Value and Ease of Use. Copilot has caught up significantly with Agent Mode. Cursor 2.0 offers the best balance of advanced features (Parallel Agents, Memories), usability, and unlimited usage at $20.

The competitive landscape changed dramatically in November 2025. While Claude Opus 4.5 leads on accuracy (80.9%), competitors like GitHub Copilot and Cursor have closed the feature gap with new agent modes and proprietary models.

Updated Competitive Landscape: December 2025

| Feature | Claude Code | Cursor 2.0 | GitHub Copilot | Windsurf | Aider |

|---|---|---|---|---|---|

| 💰 Pricing | $20-$200/mo | $20-$200/mo | $10/mo | $0-$15/mo | Free (API costs) |

| 🤖 Best Model | Opus 4.5 (80.9%) (Max plans only) | Composer + GPT-5 | GPT-5.1 Codex (77.9%) | Gemini 3 Pro (76.2%) | BYO Model |

| 🧠 Context Awareness | Full codebase | Full codebase | Multi-file (Agent Mode) | Full codebase | Full codebase |

| 📈 Usage Limits | ⚠️ 45 msg/5hr (Pro) | ✅ Unlimited ($20) | ✅ Unlimited | ⚠️ Credit-based | API limits |

| 💻 Interface | Terminal + VS Code | GUI/IDE | IDE integrated | GUI/IDE | Terminal |

| ⚡ Agent Mode | ✅ Built-in | ✅ Parallel (8x) | ✅ Yes (Nov 2025) | ✅ Cascade | ✅ Built-in |

| 📋 Plan Mode | ✅ Yes | ✅ With to-dos | ✅ Yes (Nov 2025) | ⚠️ Limited | ⚠️ Limited |

| 💾 Persistent Memory | ⚠️ Limited (CLAUDE.md) | ✅ Memories (GA) | ❌ No | ✅ Auto-generate | ❌ No |

| 🔄 Multi-file Refactoring | ✅ Yes | ✅ Yes | ✅ Agent Mode | ✅ Yes | ✅ Yes |

| 📚 Learning Curve | Steep (Terminal) | Easy | Easy | Easy | Moderate |

| 🎯 Best For | Highest accuracy needs | Unlimited usage & GUI | GitHub ecosystem | Budget-conscious | CLI power users |


Quick Takeaway (December 2025): Claude Code (Max plans) offers the highest accuracy (80.9%) but at a premium ($100-200/mo) with usage limits. Competitors have significantly closed the feature gap. Cursor 2.0 ($20/mo) offers unlimited usage, parallel agents, and a great GUI. GitHub Copilot ($10/mo) now has Agent Mode, making it a strong budget contender.

8. Migration Guides: Switching From Other Tools

Switching AI coding tools requires adapting your workflow. Here’s how to transition smoothly to Claude Code’s terminal-native, agentic approach.

Migrating from GitHub Copilot

The Mental Shift: Move from primarily “instant suggestion while typing” (autocomplete) to “conversational pair programmer” (agentic). While Copilot now has Agent Mode, Claude Code’s workflow is inherently agent-first.

- Copilot Workflow: Write code → Accept suggestion → Repeat. (Or use new Agent Mode chat).

- Claude Code Workflow: Describe task → Review plan → Approve execution → Review result.

What You Gain:

- More robust codebase awareness.

- More mature agentic features (MCP, Subagents).

- Higher accuracy on complex tasks (with Opus 4.5/Max plan).

What You Lose:

- Instant inline autocomplete.

- $10/month unlimited usage (Claude Code is $20+ with limits).

Transition Tips:

- Start with well-defined tasks: “Add a new API endpoint for X” instead of relying on autocomplete mid-function.

- Use Plan Mode (press Shift+Tab twice): Get used to reviewing the approach before execution.

- Keep Copilot Active (Optional): Many developers use both—Copilot for instant autocomplete, Claude Code for complex tasks.

Migrating from Cursor/Windsurf (GUI Agents)

The Mental Shift: Move from a visual IDE experience to a terminal-based workflow.

- Cursor/Windsurf Workflow: Highlight code → Chat in sidebar → Apply changes visually.

- Claude Code Workflow: Run `claude` in terminal → Converse → Review diffs in IDE (if integrated).

What You Gain:

- Access to Opus 4.5 (highest accuracy, if on Max plan).

- Terminal-native experience (zero context switching for CLI users).

- Powerful hooks system for automation.

What You Lose:

- Polished GUI and visual editing experience.

- Unlimited usage at $20 (Cursor Pro).

- Parallel agent execution (Cursor 2.0).

- Superior Persistent Memories feature (Cursor/Windsurf).

Transition Tips:

- Set up IDE integration immediately: This bridges the gap by allowing diff viewing in your familiar editor.

- Use CLAUDE.md: Replicate persistent context features by documenting project conventions in `.claude/CLAUDE.md`.

- Try the VS Code Extension: If the terminal is too jarring, the new VS Code extension offers a GUI alternative.

Migrating from ChatGPT (Copy-Paste)

The Mental Shift: Move from manual context management and copy-pasting to an integrated agent.

- ChatGPT Workflow: Copy code → Paste into chat → Describe issue → Copy response → Paste back into IDE.

- Claude Code Workflow: Describe task in terminal → Claude reads context automatically → Edits files directly.

What You Gain:

- Massive time savings (no more copy-pasting).

- Automatic context awareness (knows your entire project).

- Direct file editing and command execution.

What You Lose:

- Free access (if using free ChatGPT).

Transition Tips:

- Trust the context: Stop pasting code snippets. Just describe what you need and let Claude find the relevant files.

- Start small and review carefully: Get comfortable with direct file editing by starting with small changes and reviewing the diffs.

9. Best For, Worst For: Should You Actually Use This? (Dec 2025)

The competitive landscape has shifted significantly. Here is the updated decision framework for who should use Claude Code in December 2025:

✅ Choose Claude Code Max ($100-200/mo) if:

- You need the absolute best accuracy: Opus 4.5’s 80.9% SWE-bench score is the industry leader. If that extra 3-5 percentage points of accuracy matters for mission-critical code, this is the tool.

- Your hourly rate is $150+: The ROI justifies the premium cost if you save 4+ hours monthly.

- You prefer terminal-native workflow: Claude Code is built for developers comfortable in the terminal.

- You value Anthropic’s approach: If safety research and responsible AI development matter to you.

- Budget allows professional tooling: You view this as an investment in productivity.

⚠️ Consider Alternatives ($10-20/mo) if:

Choose GitHub Copilot ($10/mo) if:

- You’re deeply integrated with the GitHub ecosystem.

- The new Agent Mode capabilities (launched Nov 2025) meet your needs.

- Budget is the primary constraint.

- You want unlimited usage without worrying about limits.

Choose Cursor Pro ($20/mo) if:

- You want unlimited usage at the $20 price point.

- Parallel agent execution (8x simultaneous tasks) is appealing.

- Persistent Memories feature is valuable for long-term projects.

- You prefer a GUI experience over the terminal.

Choose Windsurf Pro ($15/mo) if:

- Budget is tight but you want agentic features.

- Gemini 3 Pro performance is sufficient for your tasks.

- Credit-based pricing model works better for your usage patterns.

❌ Skip Claude Code Pro ($20/mo) if:

The value proposition for the Pro plan has weakened significantly compared to competitors:

- You’re paying the same as Cursor but getting usage limits: Cursor offers unlimited usage at $20/mo.

- You don’t specifically need Sonnet 4.5: Competitors offer comparable models (Composer, GPT-5.1).

- Unlimited usage matters more than marginal accuracy gains.

- You’ll hit the 45 message/5hr limit regularly: This disrupts flow state and reduces productivity.

❌ Worst For (General):

1. Beginners Learning to Code

- Research shows junior developers benefit most from tools with visual feedback.

- Better alternative: Cursor’s GUI or ChatGPT with explanations.

2. Developers Wanting Minimal Oversight

“You have now become part code reviewer and part product manager. The code is no longer yours, BUT it’s still your responsibility to ensure whatever goes into master is of high enough quality.” If you’re not prepared for this role shift, Claude Code will frustrate you.

10. Installation & Setup: The Real Process

System Requirements:

macOS 10.15+, Ubuntu 20.04+/Debian 10+, or Windows 10+ (with WSL 1, WSL 2, or Git for Windows)

Installation Methods:

Method 1: npm (Traditional) Run `npm install -g @anthropic-ai/claude-code`. Do NOT use `sudo`, as this can lead to permission issues and security risks.

Method 2: Native Install (Beta) For macOS, Linux, WSL:

`curl -fsSL https://claude.ai/install.sh | bash`

Authentication:

When you start an interactive session with the claude command, you’ll need to log in. Once logged in, credentials are stored and you won’t need to log in again.

Common Issues:

WSL Users: If you encounter OS/platform detection issues during installation, WSL may be using Windows npm. Try installing with `npm install -g @anthropic-ai/claude-code --force --no-os-check` (Do NOT use `sudo`).

If you see “exec: node: not found,” your WSL environment may be using a Windows installation of Node.js. Confirm with `which npm` and `which node`; they should point to Linux paths starting with `/usr/` rather than `/mnt/c/`.
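The same check can be scripted if you set up machines often. The sketch below uses Python's standard `shutil.which` to locate each tool and flags anything that resolves under `/mnt/`, where WSL mounts Windows drives. The helper names are illustrative, and the `/mnt/` prefix test is a heuristic assumption rather than an official detection method.

```python
import shutil
from typing import Optional


def is_windows_mount(path: Optional[str]) -> bool:
    """True if the path lives under /mnt/, where WSL mounts Windows drives."""
    return path is not None and path.startswith("/mnt/")


def check_tools(names: tuple[str, ...] = ("npm", "node")) -> dict[str, str]:
    """Report where each tool resolves and whether it looks like a Windows install."""
    report = {}
    for name in names:
        path = shutil.which(name)
        if path is None:
            report[name] = "not found"
        elif is_windows_mount(path):
            report[name] = f"Windows install ({path})"
        else:
            report[name] = f"ok ({path})"
    return report


for tool, status in check_tools().items():
    print(f"{tool}: {status}")
```

A "Windows install" result for either tool points to the Node.js mix-up described above.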

11. Troubleshooting: Common Issues & Solutions

Installation Problems

Issue: “exec: node: not found” error on WSL

Cause: WSL is using Windows installation of Node.js instead of Linux version

Solution:

```shell
# Check which Node you're using
which npm
which node
# Should show /usr/ paths, not /mnt/c/

# If showing Windows paths, install a Linux copy of Node inside WSL via nvm:
curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.0/install.sh | bash
# Restart the shell (or source ~/.bashrc) so nvm is on your PATH, then:
nvm install --lts
```

Usage & Performance Issues

Issue: Constantly asking for permission

Problem: Every file change requires manual approval, disrupting flow

Solution:

Enable auto-accept mode for the session: in Claude Code, type `/config`, or choose “Accept all” when prompted.

Warning: Only use auto-accept on trusted codebases you control

Issue: “Usage limit reached” mid-session

Problem: Hit the 45-message limit on Pro plan during intensive coding

Solutions:

- Immediate: Switch to another tool temporarily (Copilot, Cursor, ChatGPT)

- Long-term: Upgrade to Max 5x or 20x if this happens weekly, or switch to Cursor Pro for unlimited usage at $20.

Issue: Claude Code “forgets” previous instructions

Problem: Doesn’t remember context you provided earlier in session

Solutions:

- Use CLAUDE.md file: Create `.claude/CLAUDE.md` with permanent project context

- Use checkpoints: Save conversation state at key points (`/checkpoint`)

- Start fresh: Fork conversation with ESC+ESC when context gets messy

Developer tip: “I treat Claude Code like a junior dev who reset their memory each morning. Always restate the important parts.”

Model & Performance Issues

Issue: Claude Code gives worse results than Claude.ai chat

Possible causes:

- Insufficient context: Terminal environment has less context than you manually provided in chat

- Different system prompts: Claude Code optimized for actions, not explanations

- Model differences: Ensure you are using the same model. If you use Opus 4.5 on web but only have Pro plan for Code, you are using Sonnet 4.5 in Code.

12. Community Verdict: What Developers Really Think

The Overwhelmingly Positive:

From Puzzmo’s engineering team after 6 weeks: “Claude Code has considerably changed my relationship to writing and maintaining code at scale. I feel like I have a new freedom of expression which is hard to fully articulate.”

From a developer who created 12 projects: “It’s allowed me to write ~12 programs/projects in relatively little time… Most of them, I wouldn’t even have bothered to write without Claude Code, simply because they’d take too much of my time.”

The Realistic Concerns:

Context and Memory Issues: “It doesn’t really do a good job remembering things you ask (even via CLAUDE.md).”

Iteration Required: “Keep in mind that the model is often incorrect on the first proposal. Expect to iterate in Plan Mode before coding and again even after code is written.”

Role Shift Required: “I’m more critical of ‘my code’ now because I didn’t type out a lot of it. No emotional attachment means better reviews.”

The “Post-Junior Developer” Consensus:

Multiple developers describe Claude Code as performing at a “Post-Junior” level: “There’s a lot of experience there and a whole boat-load of energy, but it doesn’t really do a good job remembering things.”

13. The Benchmark Reality Check

Repository-Level Coding Performance Improvement

Agent-based tools vs. base LLMs on complex coding tasks (Source: Academic research)

Research Finding: Agent-based tools like Claude Code show 18-250% performance improvement over base LLMs on repository-level tasks. This is why context awareness matters for multi-file projects.

AI Coding Tool Adoption Among Developers

Survey of 481 professional programmers (Source: Sergeyuk et al., 2024)

Industry Trend: 84.2% of developers now use AI coding assistants, with ChatGPT (72.1%) and Copilot (37.9%) leading. The question isn’t “should I use AI?” but “which tool fits my workflow?”

What Independent Testing Actually Shows

SWE-bench Performance (The New Gold Standard): The November 2025 release of Claude Opus 4.5 set a new record with 80.9% accuracy on SWE-bench Verified. This is a measurable lead over GPT-5.1 Codex Max (77.9%).

Terminal-Bench Performance: Independent benchmarks testing AI models on 80 terminal-based coding tasks found that state-of-the-art models achieve around 60% accuracy overall, with performance varying dramatically by task difficulty—65% on easy tasks, dropping to just 16% on hard ones.

What This Means For You:

- Easy tasks (simple scripts, basic functions): Highest success rates, though Terminal-Bench’s 65% on its easy tier means even these deserve a quick review

- Medium tasks (adding features, writing tests): Good results, always review output

- Hard tasks (complex debugging, architecture): Improved performance with Opus 4.5, but still requires significant human oversight. Use as assistant not replacement.

The Bottom Line: Claude Opus 4.5 is the most accurate model available, but no AI tool is perfect. Claude Code succeeds when:

- Tasks are well-defined

- You provide clear context

- You review and test all output

- You treat it as a pair programmer in “reviewer mode,” not a replacement

14. FAQs: Your Questions Answered

Is there a free version of Claude Code?

Quick Answer: No. Claude Code requires at least a Pro subscription ($20/month).

Free or low-cost alternatives: Aider (open source; you pay only API costs) or Windsurf (generous free tier).

How does Claude Code compare to GitHub Copilot (Dec 2025)?

The gap has narrowed significantly with the launch of Copilot Agent Mode:

Claude Code: Higher accuracy with Opus 4.5 (80.9% on SWE-bench Verified vs 77.9% for GPT-5.1 Codex Max), but only on $100+ plans. More mature agentic features. Usage limits.

Copilot: $10/month unlimited usage. Instant autocomplete (which Claude lacks). New Agent Mode and Plan Mode.

Choose Claude Code if: You need the absolute best accuracy (and pay for Max) or prefer terminal workflow.

Choose Copilot if: You value unlimited usage, low cost, instant autocomplete, and GitHub integration.

How does Claude Code compare to Cursor 2.0?

Cursor 2.0 is the strongest direct competitor.

Claude Code: Higher raw accuracy (Opus 4.5 on Max plans). Terminal-native. Usage limits.

Cursor 2.0: Unlimited usage at $20/mo (better value than Claude Pro). GUI-based. Parallel agent execution (up to 8 tasks at once). Persistent Memories feature.

Choose Cursor if: You prefer GUI, need unlimited usage at $20, or value parallel agents and persistent memory.

15. Recent Updates & 2026 Roadmap

November/December 2025 (Major Updates)

Claude Opus 4.5 Launch (Nov 24)

- New flagship model released, setting a record 80.9% on SWE-bench Verified.

- Available only to Max subscribers ($100-200/month).

Competitor Landscape Shift

- GitHub Copilot: Launched Agent Mode and Plan Mode.

- Cursor 2.0: Released Composer model and parallel agent execution.

- Windsurf: Integrated Gemini 3 Pro.

October 2025

Native VS Code Extension (Beta)

- New GUI option launched.

What’s Coming in 2026: The Roadmap

Claude Code (Anthropic’s Plans):

- Potential Claude Opus 5 or Sonnet 5 models.

- Enterprise team features.

GitHub Copilot (Confirmed Roadmap):

- Project Padawan: Fully autonomous agent.

- Open sourcing Copilot in VS Code.

Cursor (Expected Direction):

- Deeper infrastructure integrations.

- Enhanced Composer model.

The Industry Trend

Every major player is moving toward the same feature set. The differentiation is narrowing to:

- Model quality (Claude Opus 4.5 currently leads at 80.9%)

- Pricing/limits (Copilot cheapest, Cursor best unlimited value)

- Workflow preference (Terminal vs GUI)

The Final Verdict: December 2025

Claude Code with Opus 4.5 is technically the most accurate AI coding assistant available (80.9% SWE-bench), but the competitive landscape has changed dramatically in late 2025.

The Truth:

- Opus 4.5 is best-in-class but requires $100-200/month.

- The accuracy gap is narrowing (3 percentage points vs GPT-5.1 Codex Max).

- Usage limits remain a significant pain point vs competitors’ unlimited offerings.

- Competitors (GitHub Copilot, Cursor 2.0) added agent modes that close the workflow gap.

Decision Framework (December 2025):

Pay $100-200/month for Claude Code Max if: You need the absolute best accuracy available, work on mission-critical code, and your hourly rate justifies the premium.

Pay $20/month for Cursor instead of Claude Code Pro if: You want unlimited usage, parallel agents, and persistent memories. (Cursor currently offers better value at the $20 price point.)

Pay $10/month for GitHub Copilot if: You’re integrated with GitHub, Agent Mode meets your needs, and budget is a constraint.
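The "hourly rate justifies the premium" test can be made concrete with a quick break-even calculation. The sketch below uses the monthly prices quoted in this post; the `HOURLY_RATE` value, the function name `break_even_hours`, and the "low tier" / "high tier" labels for the two Max price points are hypothetical placeholders, so substitute your own figures.

```python
# Monthly prices quoted in this post (USD).
# The "low tier" / "high tier" labels are illustrative names for the
# $100 and $200 Max price points.
PLANS = {
    "GitHub Copilot": 10,
    "Cursor": 20,
    "Claude Code Pro": 20,
    "Claude Code Max (low tier)": 100,
    "Claude Code Max (high tier)": 200,
}


def break_even_hours(monthly_price: float, hourly_rate: float) -> float:
    """Hours of saved work per month needed to cover the subscription."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_price / hourly_rate


HOURLY_RATE = 75.0  # hypothetical value; replace with your own rate

for plan, price in PLANS.items():
    hours = break_even_hours(price, HOURLY_RATE)
    print(f"{plan}: {hours:.2f} h/month to break even")
```

This covers cost only. It says nothing about usage limits or accuracy, which are the other two axes of the framework above, so treat the result as a floor for the decision rather than the decision itself.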

The math changed: Claude Code was clearly ahead 6 months ago. Today, it’s the most accurate option, but competitors offer compelling trade-offs. Choose based on whether that 3-5 percentage point accuracy gain is worth the premium cost and usage limits for your specific work.

Last updated: December 3, 2025. Major updates include Claude Opus 4.5 launch (Nov 24), GitHub Copilot Agent Mode (Nov), Cursor 2.0 with Composer (Nov), and Windsurf Gemini 3 Pro integration (Nov). Bookmark this page for monthly updates.

Found this updated analysis helpful? Share your experiences with Claude Code vs competitors in the comments. Are you sticking with Claude Code after the competitive updates? Did you switch? Your data helps other developers decide.
